In this entry I compare four public Cloud APIs (AWS EC2, GoGrid, Rackspace and Sun Cloud) to see what practical benefits REST provides for resource management protocols.

As someone who was involved with the creation of the WS-* stack (especially the parts related to resource management) and who genuinely likes the SOAP processing model I have a tendency to be a little defensive about REST, which is often defined in opposition to WS-*. On the other hand, as someone who started writing web apps when the state of the art was a CGI Perl script, who loves on-the-wire protocols (e.g. this recent exploration of the Windows management stack from an on-the-wire perspective), who is happy to deal with raw XML (as long as I get to do it with a good library), who appreciates the semantic web, and who values models over protocols the REST principles are very natural to me.

I have read the introduction and the bible but beyond this I haven’t seen a lot of practical and profound information about using REST (by “profound” I mean something that is not obvious to anyone who has written web applications). I had high hopes when Pete Lacey promised to deliver this through a realistic example, but it seems to have stalled after two posts. Still, his conversation with Stefan Tilkov (video + transcript) remains the most informed comparison of WS-* and REST.

The domain I care the most about is IT resource management (which includes “Cloud” in my view). I am familiar with most of the remote API mechanisms in this area (SNMP to WBEM to WMI to JMX/RMI to OGSI, to WSDM/WS-Management to a flurry of proprietary interfaces). I can think of ways in which some REST principles would help in this area, but they are mainly along the lines of “any consistent set of principles would help” rather than anything specific to REST. For a while now I have been wondering if I am missing something important about REST and its applicability to IT management or if it’s mostly a matter of “just pick one protocol and focus on the model” (as well as simply avoiding the various drawbacks of the alternative methods, which is a valid reason but not an intrinsic benefit of REST).

I have been trying to learn from others, by looking at how they apply REST to IT/Cloud management scenarios. The Cloud area has been especially fecund in such specifications so I will focus on this for part 1. Here is what I think we can learn from this body of work.

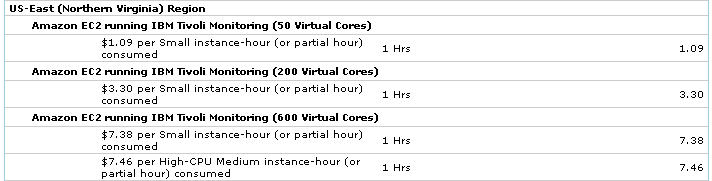

Amazon EC2

When it came out a few years ago, the Amazon EC2 API, with its equivalent SOAP and plain-HTTP alternatives, did nothing to move me from the view that it’s just a matter of picking a protocol and being consistent. They give you the choice of plain HTTP versus SOAP, but it’s just a matter of tweaking how the messages are serialized (URL parameters versus a SOAP message in the input; whether or not there is a SOAP wrapper in the output). The operations are the same whether you use SOAP or not. The responses don’t even contain URLs. For example, “RunInstances” returns the IDs of the instances, not a URL for each of them. You then call “TerminateInstances” and pass these instance IDs as parameters rather than doing a “delete” on an instance URL. This API seems to have served Amazon (and their ecosystem) well. It’s easy to understand, easy to use and it provides a convenient way to handle many instances at once. Since no SOAP header is supported, the SOAP wrapper adds no value (I remember reading that the adoption rate for the EC2 SOAP API reflect this though I don’t have a link handy).

Overall, seeing the EC2 API did not weaken my suspicion that there was no fundamental difference between REST and SOAP in the IT/Cloud management field. But I was very aware that Amazon didn’t really “do” REST in the EC2 API, so the possibility remained that someone would, in a way that would open my eyes to the benefits of true REST for IT/Cloud management.

Fast forward to 2009 and many people have now created and published RESTful APIs for Cloud computing. APIs that are backed by real implementations and that explicitly claim RESTfulness (unlike Amazon). Plus, their authors have great credentials in datacenter automation and/or REST design. First came GoGrid, then the Sun Cloud API and recently Rackspace. So now we have concrete specifications to analyze to understand what REST means for resource management.

I am not going to do a detailed comparative review of these three APIs, though I may get to that in a future post. Overall, they are pretty similar in many dimensions. They let you do similar things (create server instances based on images, destroy them, assign IPs to them…). Some features differ: GoGrid supports more load balancing features, Rackspace gives you control of backup schedules, Sun gives you clusters (a way to achieve the kind of manage-as-group features inherent in the EC2 API), etc. Leaving aside the feature-per-feature comparison, here is what I learned about what REST means in practice for resource management from each of the three specifications.

GoGrid

Though it calls itself “REST-like”, the GoGrid API is actually more along the lines of EC2. The first version of their API claimed that “the API is a REST-like API meaning all API calls are submitted as HTTP GET or POST requests” which is the kind of “HTTP ergo REST” declaration that makes me cringe. It’s been somewhat rephrased in later versions (thank you) though they still use the undefined term “REST-like”. Maybe it refers to their use of “call patterns”. The main difference with EC2 is that they put the operation name in the URI path rather than the arguments. For example, EC2 uses

https://ec2.amazonaws.com/?Action=TerminateInstances&InstanceId.1=i-2ea64347&…(auth-parameters)…

while GoGrid uses

https://api.gogrid.com/api/grid/server/delete?name=My+Server+Name&…(auth-parameters)…

So they have action-specific endpoints rather than a do-everything endpoint. It’s unclear to me that this change anything in practice. They don’t pass resource-specific URLs around (especially since, like EC2, they include the authentication parameters in the URL), they simply pass IDs, again like EC2 (but unlike EC2 they only let you delete one server at a time). So whatever “REST-like” means in their mind, it doesn’t seem to be “RESTful”. Again, the EC2 API gets the job done and I have no reason to think that GoGrid doesn’t also. My comments are not necessarily a criticism of the API. It’s just that it doesn’t move the needle for my appreciation of REST in the context of IT management. But then again, “instruct William Vambenepe” was probably not a goal in their functional spec

Rackspace

In this “interview” to announce the release of the Rackspace “Cloud Servers” API, lead architects Erik Carlin and Jason Seats make a big deal of their goal to apply REST principles: “We wanted to adhere as strictly as possible to RESTful practice. We iterated several times on the design to make it more and more RESTful. We actually did an update this week where we made some final changes because we just didn’t feel like it was RESTful enough”. So presumably this API should finally show me the benefits of true REST in the IT resource management domain. And to be sure it does a better job than EC2 and GoGrid at applying REST principles. The authentication uses HTTP headers, keeping URLs clean. They use the different HTTP verbs the way they are intended. Well mostly, as some of the logic escapes me: doing a GET on /servers/id (where id is the server ID) returns the details of the server configuration, doing a DELETE on it terminates the server, but doing a PUT on the same URL changes the admin username/password of the server. Weird. I understand that the output of a GET can’t always have the same content as the input of a PUT on the same resource, but here they are not even similar. For non-CRUD actions, the API introduces a special URL (/servers/id/action) to which you can POST. The type of the payload describes the action to execute (reboot, resize, rebuild…). This is very similar to Sun’s “controller URLs” (see below).

I came out thinking that this is a nice on-the-wire interface that should be easy to use. But it’s not clear to me what REST-specific benefit it exhibits. For example, how would this API be less useful if “delete” was another action POSTed to /servers/id/action rather than being a DELETE on /servers/id? The authors carefully define the HTTP behavior (content compression, caching…) but I fail to see how the volume of data involved in using this API necessitates this (we are talking about commands here, not passing disk images around). Maybe I am a lazy pig, but I would systematically bypass the cache because I suspect that the performance benefit would be nothing in comparison to the cost of having to handle in my code the possibility of caching taking place (“is it ok here that the content might be stale? what about here? and here?”).

Sun

Like Rackspace, the Sun Cloud API is explicitly RESTful. And, by virtue of Tim Bray being on board, we benefit from not just seeing the API but also reading in well-explained details the issues, alternatives and choices that went into it. It is pretty similar to the Rackspace API (e.g. the “controller URL” approach mentioned above) but I like it a bit better and not just because the underlying model is richer (and getting richer every day as I just realized by re-reading it tonight). It handles many-as-one management through clusters in a way that is consistent with the direct resource access paradigm. And what you PUT on a resource is closely related to what you GET from it.

I have commented before on the Sun Cloud API (though the increasing richness of their model is starting to make my comments less understandable, maybe I should look into changing the links to a point-in-time version of Kenai). It shows that at the end it’s the model, not the protocol that matters. And Tim is right to see REST in this case as more of a set of hygiene guidelines for on-the-wire protocols then as the enabler for some unneeded scalability (which takes me back to wondering why the Rackspace guys care so much about caching).

Anything learned?

So, what do these APIs teach us about the practical value of REST for IT/Cloud management?

I haven’t written code against all of them, but I get the feeling that the Sun and Rackspace APIs are those I would most enjoy using (Sun because it’s the most polished, Rackspace because it doesn’t force me to use JSON). The JSON part has two component. One is simply my lack of familiarity with using it compared to XML, but I assume I’ll quickly get over this when I start using it. The second is my concern that it will be cumbersome when the models handled get more complex, heterogeneous and versioned, chiefly from the lack of namespace support. But this is a topic for another day.

I can’t tell if it’s a coincidence that the most attractive APIs to me happen to be the most explicitly RESTful. On the one hand, I don’t think they would be any less useful if all the interactions where replaced by XML RPC calls. Where the payloads of the requests and responses correspond to the parameters the APIs define for the different operations. The Sun API could still return resource URLs to me (e.g. a VM URL as a result of creating a VM) and I would send reboot/destroy commands to this VM via XML RPC messages to this URL. How would it matter that everything goes over HTTP POST instead of skillfully choosing the right HTTP verb for each operation? BTW, whether the XML RPC is SOAP-wrapped or not is only a secondary concern.

On the other hand, maybe the process of following REST alone forces you to come up with a clear resource model that makes for a clean API, independently of many of the other REST principles. In this view, REST is to IT management protocol design what classical music training is to a rock musician.

So, at least for the short-term expected usage of these APIs (automating deployments, auto-scaling, cloudburst, load testing, etc) I don’t think there is anything inherently beneficial in REST for IT/Cloud management protocols. What matter is the amount of thought you put into it and that it has a clear on-the-wire definition.

What about longer term scenarios? Wouldn’t it be nice to just use a Web browser to navigate HTML pages representing the different Cloud resources? Could I use these resource representations to create mashups tying together current configuration, metrics history and events from wherever they reside? In other words, could I throw away my IT management console because all the pages it laboriously generates today would exist already in the ether, served by the controllers of the resources. Or rather as a mashup of what is served by these controllers. Such that my IT management console is really “in the cloud”, meaning not just running in somebody else’s datacenter but rather assembled on the fly from scattered pieces of information that live close to the resources managed. And wouldn’t this be especially convenient if/when I use a “federated” cloud, one that spans my own datacenter and/or multiple Cloud providers? The scalability of REST could then become more relevant, but more importantly its mashup-friendliness and location transparency would be essential.

This, to me, is the intriguing aspect of using REST for IT/Cloud management. This is where the Sun Cloud API would beat the EC2 API. Tim says that in the Sun Cloud “the router is just a big case statement over URI-matching regexps”. Tomorrow this router could turn into five different routers deployed in different locations and it wouldn’t change anything for the API user. Because they’d still just follow URLs. Unlike all the others APIs listed above, for which you know the instance ID but you need to somehow know which controller to talk to about this instance. Today it doesn’t matter because there is one controller per Cloud and you use one Cloud at a time. Tomorrow? As Tim says, “the API doesn’t constrain the design of the URI space at all” and this, to me, is the most compelling long-term reason to use REST. But it only applies if you use it properly, rather than just calling your whatever-over-HTTP interface RESTful. And it won’t differentiate you in the short term.

The second part in the “REST in practice for IT and Cloud management” series will be about the use of REST for configuration management and especially federation. Where you can expect to read more about the benefits of links (I mean “hypermedia”).

[UPDATE: Part 2 is now available. Also make sure to read the comments below.]