I try to keep posts on this blog relevant to the general topic of IT management. Less than 10% of messages are in the “off-topic” category and even those are usually somewhat related to computer technology (mostly rants against the misuse of Flash and against the stupid ways in which US Social Security numbers are used). What this means in practice is that off-topic drafts are often abandoned when I realize that they are not relevant enough to make the cut. My “drafts” folder is a boneyard of such entries. Today, I am relaxing my standards and subjecting you to a list of them (they are still computer-related). Hopefully, either you find at least some of them interesting, or you come out with a renewed appreciation of what you’ve been spared over the years. Since they are all in one post, those who really only want to read about IT management (at least from me) can easily skip it altogether without being too tempted to hit the “unsubscribe” button.

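Here is a list of the topics covered below:

- Messing with a blogger’s head

- Google search suggestions

- Google to navigate rather than search

- What is a computer

- Is this a site or a feed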

Messing with a blogger’s head

I recently looked at the HTTP logs for this site. Maybe I am the last blogger to realize this, but it looks like the online blog readers (e.g. Google Reader, Bloglines…) tell you how many subscribers they have for your feed. They do this through the user-agent HTTP header, which gets logged. It looks something like this:

Feedfetcher-Google; (+http://www.google.com/feedfetcher.html; 102 subscribers; feed-id=…)

Of course that’s only on a per-feed basis, so you need to add all the feeds (Atom and the different RSS versions) to get a total. Still, it’s a lot more visibility than I had before.
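As a rough sketch of that addition, here is how the totals could be pulled out of logged user-agent strings. The log lines and feed ids below are made up, and the pattern only assumes the "N subscribers; feed-id=..." shape shown above. It also assumes a single reader, since feed ids from different readers would need to be kept apart.

```python
import re

# Matches the subscriber count and feed id that some feed readers embed in
# their user-agent string, e.g. "...; 102 subscribers; feed-id=111)".
SUBSCRIBERS_RE = re.compile(r"(\d+) subscribers?; feed-id=(\w+)")


def total_subscribers(user_agents):
    """Sum the latest subscriber count reported for each distinct feed id."""
    latest = {}
    for ua in user_agents:
        match = SUBSCRIBERS_RE.search(ua)
        if match:
            count, feed_id = match.groups()
            # Later log lines overwrite earlier ones for the same feed.
            latest[feed_id] = int(count)
    return sum(latest.values())


# Made-up user-agent strings, in log order; the feed ids are placeholders.
sample = [
    "Feedfetcher-Google; (+http://www.google.com/feedfetcher.html; 102 subscribers; feed-id=111)",
    "Feedfetcher-Google; (+http://www.google.com/feedfetcher.html; 40 subscribers; feed-id=222)",
    "Feedfetcher-Google; (+http://www.google.com/feedfetcher.html; 105 subscribers; feed-id=111)",
    "Mozilla/5.0 (X11; Linux x86_64)",
]
print(total_subscribers(sample))  # 145
```

Only the most recent count per feed is kept, so a feed polled many times a day is not counted more than once.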

My first thought was “hey, some people are reading, better watch what I write”. But I quickly discarded that in favor of a more intriguing idea: if bloggers use this data, how hard would it be to mess with their heads? After all, this is not verifiable. Anyone can send HTTP requests with any user-agent they want. I can pick a blog and start sending HTTP GET requests on their feeds with a user agent that pretends to be “Feedfetcher-Google”. And I can set the “subscribers” number to anything I want. To not be too suspicious, I could slowly pump it up, to look like a realistic increase.
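To see why the number can't be trusted, it helps to look at how little it takes to build such a request. This is only an illustration: the feed URL and the feed id are hypothetical, and the request is constructed but never sent.

```python
import urllib.request

# Hypothetical feed URL; nothing is sent in this sketch.
FEED_URL = "http://blog.example.org/feed/atom"

claimed_subscribers = 5000  # any number the sender cares to type
user_agent = (
    "Feedfetcher-Google; (+http://www.google.com/feedfetcher.html; "
    f"{claimed_subscribers} subscribers; feed-id=0)"
)

# The client chooses every header, including User-Agent; the server has no
# way to check the claim from the request alone.
request = urllib.request.Request(FEED_URL, headers={"User-Agent": user_agent})
print(request.get_header("User-agent"))
```

Nothing in the request proves where it came from, which is why the logged count is a hint rather than a measurement.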

Of course, an alert blogger would probably smell a rat if the number of subscribers shot up while the number of incoming links and comments didn’t change, if the site still didn’t show up near the top of Google searches, or if the Technorati “authority” didn’t change, etc. There are plenty of ways to reality-test this. But people have an amazing ability to suspend disbelief when they like what they see, however logic-defying. If you don’t believe me, I have a pile of mortgage-backed securities to sell you.

This stat-pumping experiment could be done as a practical joke. It could be done out of meanness. It could be done as an unethical and pointless sociological study (how many subscribers does it take for someone to go buy a Porsche on the assumption that the traffic will eventually turn into $$$, how does the impression of popularity change the writing on the blog…). It could even be done as a fraud (guaranteed increase in your subscription numbers if you sign up for my blog marketing service or you get your money back: just check your logs to see the results… – of course you could also generate fake users to create real subscriptions). It hits bloggers where they are the most vulnerable: the ego.

If you are thinking of doing this as a way to be nice to someone who needs encouragement, it will probably backfire. Before you proceed, listen to act two of this radio show (description: “A group called Improv Everywhere decides that an unknown band, Ghosts of Pasha, playing their first ever tour in New York, ought to think they’re a smash hit. So they study the band’s music and then crowd the performance, pretending to be hard-core fans. Improv Everywhere just wants to make the band happy — to give them the best day of their lives. But the band doesn’t see it that way.”)

Google search suggestions

When you enter a Google search query (on google.com or in the Firefox search bar), as soon as you’ve typed a few characters it proposes to complete your search terms (BTW, it’s not just Google, it is now a well-known extension to OpenSearch but Google pioneered it, at least according to the spec). Something about this just doesn’t sound right. If you think you know what I am looking for, why not propose the most likely answers rather than trying to complete my search request? If you get it right, then I’ll stop typing and I’ll click. Plus, Google already concentrates viewers on a small set of pages for each search query. With this feature, won’t they compound this by concentrating people on a smaller set of queries, further shrinking the Web?

Since Google feels free to give me plenty of unsolicited suggestions, here is mine to them. If you are going to hand-hold people as they write their queries, provide suggestions that disambiguate rather than suggestions that overly constrain. For example, if I type “python”, I get these suggestions:

“python tutorial”, “python list”, “python string”, “python ide”, “python download”, “python for loop”, “python datetime”, “python re”, “python time”, “python os”, all clearly about the programming language. Wouldn’t it be more useful to detect algorithmically that results from searching on “python” fall into three largely disjoint groups, to detect a common word in each group and to ask the user to qualify their “python” request with either “programming”, “snake” or “monty”? Rather than the simpler but, in my opinion, less valuable approach of showing the most popular search queries that start with “python”?
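Here is a minimal sketch of that grouping idea, assuming the result snippets are already available as plain strings (the ones below are invented). It links results that share any word other than the query, then labels each group with its most frequent word. This is a toy, not how any search engine does it: real results would need stop-word removal and a smarter similarity measure, since a single shared common word would merge every group.

```python
from collections import Counter


def suggest_qualifiers(query, results):
    """Group results that share words, then name each group by its top word."""
    groups = []  # each group is a list of word sets, one set per result
    for text in results:
        words = set(text.lower().split()) - {query}
        if not words:
            continue  # nothing besides the query itself to group on
        # Split existing groups into those sharing a word with this result
        # and those that do not, then merge the overlapping ones.
        overlapping, separate = [], []
        for group in groups:
            shares_word = any(words & other for other in group)
            (overlapping if shares_word else separate).append(group)
        merged = [words] + [other for group in overlapping for other in group]
        groups = separate + [merged]
    labels = []
    for group in groups:
        counts = Counter(word for words in group for word in words)
        labels.append(counts.most_common(1)[0][0])
    return labels


# Invented snippets standing in for real search results.
results = [
    "python programming language tutorial",
    "learn python programming online",
    "python programming reference",
    "python snake habitat",
    "ball python snake care",
    "burmese python snake facts",
    "monty python flying circus",
    "monty python holy grail film",
    "monty python sketches",
]
print(suggest_qualifiers("python", results))  # ['programming', 'snake', 'monty']
```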

On the other hand, this “most popular” feature has one benefit: it provides plenty of fodder for pop psychology, as I found out when I tried to ask Google why they provide these search suggestions. As soon as I typed “why”, I got suggestions including “why men cheat” and “why did I get married”.

The part I like about all this is the meta-meta aspect. Google doesn’t only suggest what you might want to read based on your search, they even suggest what you might want to search on. What’s the next meta level? Suggesting that you want to do a web search when you’re not even thinking of doing one? You can bet they will if they can. What a butler indeed.

Google to navigate rather than search

Still on the topic of Google, but a positive comment this time. It struck me one day that pretty much every single bookmark I have in Firefox is for an Oracle-internal site, not the public Web. After thinking about it for a minute, I realized the reason: Google doesn’t index the Oracle intranet. When I find a good page there, I can’t be sure I’ll be able to find it again easily, so I bookmark it. On the Web, on the other hand, why bother bookmarking it? I pretty much know I can find it from my Firefox search bar.

Most of the time, when I use Google, it’s not to find a new page. It’s to get back to a specific page. Case in point, when I want to look something up in the XPath spec (which I have done a few times lately in the context of CMDBf). I know it’s on the W3C web site, I could go there and navigate to the page in a few clicks. I also have a copy of it on my disk, I could open my file explorer and get it from there. But instead I just type “xpath” in Google. Again, I am not really “searching” (trying to find information about XPath), I am just navigating (finding my way back to the spec).

So I started a post to share this brilliant insight, at which point I saw (using Google in “search” mode for once) that Robin Cannon has already perfectly described it.

So I’ll just add a few thoughts to complement what Robin wrote:

- I am sure the implications in terms of advertising have long been studied by Google (I would guess that people who use Google for navigation are a lot less likely to click on ads than those who are actually searching).

- AOL had to die for the “AOL keyword” to live.

- There are serious privacy aspects to letting Google know what you’re up to all the time (but I am not logged into Google, I clean up my cookie jar relatively often and, at least at work, I am behind a large enough firewall to have a mostly anonymized IP).

- Somewhat ironically, there are potential security benefits. For example, the HP employee credit union is called “Addison Avenue credit union”. Googling for “addison avenue” gets you right there. If you mistype the name and ask for “adison avenue”, you get a suggestion that maybe you meant “addison avenue”, along with a list of links related to “madison avenue”. That’s enough data to realize and correct your mistake. On the other hand, directly typing adisonavenue.com into the navigation bar could have taken you to a spoof site (in reality it takes you to a link farm, not quite as bad, but you never know what it will turn into tomorrow).
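The “maybe you meant” behavior in that last point can be approximated with a few lines of standard-library Python. This is just an illustration of fuzzy matching against a list of known names, not a description of what Google actually does; the list of known queries and the 0.8 cutoff are my own assumptions.

```python
import difflib

# Hypothetical list of names the search engine already knows about.
KNOWN_QUERIES = ["addison avenue", "madison avenue", "python tutorial"]


def did_you_mean(query, known=KNOWN_QUERIES):
    """Return known queries that are close to, but not equal to, the input."""
    if query in known:
        return []  # exact hit, nothing to correct
    return difflib.get_close_matches(query, known, n=3, cutoff=0.8)


print(did_you_mean("adison avenue"))
```

For the mistyped “adison avenue”, both “addison avenue” and “madison avenue” come back as candidates, which is the cue the reader needs to spot the typo. A URL typed straight into the navigation bar gets no such second opinion.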

BTW, am I the only one who doesn’t know what 2 of the top 3 “Google Fastest Rising Search Terms 2007” relate to (from the list in Robin’s post)?

What is a computer

It started with this New Scientist article: Ten weirdest computers. With all these examples, how do we define what a computer is? Fundamentally, it’s a physical system that can process data. Meaning that you can define a logical data model that can be mapped to the physical characteristics of the system. And the system is such that it (through the laws of physics) changes in such a way that after a time its new physical configuration represents data that corresponds to a calculation that took place on your original data. You get the resulting data by measuring physical characteristics of the system (not necessarily the same physical characteristics that you controlled to represent the input data) and deriving the result data from it. In short, to use a computer:

- Step 1: you create a system that represents your input data

- Step 2: you let the laws of physics “do their thing” on the system

- Step 3: you measure the system to derive your output data

For example, take a spring scale and a bunch of 1kg weights. That’s a computer. At least it can add (within a given range). To calculate “4+8” you put four 1kg weights on the scale, then you put eight more, then you read the number next to the needle and it should tell you “12”. This is an example in which the physical characteristic that you use to provide input data (putting weights on the scale) is different in nature from the physical characteristic that you measure to get the output (the position of the needle, which is really a way to measure the compression of the spring in the scale).
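For the fun of it, here is the spring scale simulated on a conventional computer, with one method per step. The spring stiffness and the 20kg capacity are arbitrary values I picked for the sketch; the “physics” is just Hooke’s law.

```python
G = 9.81  # gravitational acceleration in m/s^2


class SpringScale:
    """A spring scale used as an adder, following the three steps above."""

    def __init__(self, stiffness=2000.0, capacity_kg=20):
        self.stiffness = stiffness      # spring constant in N/m (assumed value)
        self.capacity_kg = capacity_kg  # the scale only "computes" in this range
        self.load_kg = 0

    def put_weights(self, count):
        """Step 1: represent input data by placing 1 kg weights on the scale."""
        if self.load_kg + count > self.capacity_kg:
            raise ValueError("input out of range for this computer")
        self.load_kg += count

    def compression(self):
        """Step 2: physics does the work (Hooke's law, x = m * g / k)."""
        return self.load_kg * G / self.stiffness

    def read_needle(self):
        """Step 3: measure the compression and map it back to a number."""
        return round(self.compression() * self.stiffness / G)


scale = SpringScale()
scale.put_weights(4)
scale.put_weights(8)
print(scale.read_needle())  # 12
```

The capacity check stands in for the “within a given range” caveat: past that point the spring no longer computes anything useful.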

Based on this, we can ask the next (and more practically useful) question: what makes a *good* computer? It has the following characteristics:

- easy to set up

- easy to measure results at needed precision level

- not too many side effects (e.g. energy consumption)

- fast and versatile (planting a pine tree seed and waiting for a pine cone to come out in order to calculate a Fibonacci sequence is a little too slow and too specialized)

- able to process large amounts of data (that’s where the mechanical scale doesn’t… scale).

On that last topic, there are two ways to process large amounts of data. The way used by current computers is to process little at once but very fast and in a way that makes it very easy to use the output of one operation as input to the next one. The alternative would be to compute a large problem in one go of the physical system. For example, maybe one day we’ll know how to represent a mathematical problem in DNA form, such that we know that the solution to the problem corresponds to the DNA sequence most useful to a bacterium in a given environment, e.g. most likely to resist a given antibiotic. Setting up the computation system, in this case, would be engineering the antibiotic that selects for the problem’s solution. You can put that antibiotic in your Petri dish (or in the food of your 1000 cows, now that’s a “computer farm”), wait for a few days, then sequence the DNA of the bacteria that are in the dish (or in your cow’s “output” matter, think of it as a “core dump”).

You can think of it as the RISC versus CISC debate, except with many more orders of magnitude in difference between the alternatives.

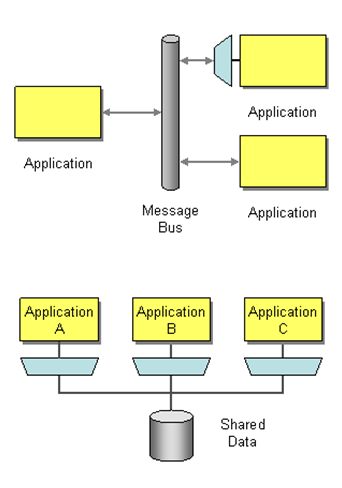

It is also interesting to note that networks and storage mechanisms (the other two constitutive elements of a data center, along with computers) can be thought of in a very similar way. If step 2 doesn’t change the data and can be made to last long enough, you have a storage system (e.g. engrave text on stone, store stone for a few thousand years, read text from stone). If instead of being far apart in time the locations in which you perform steps 1 and 3 are far apart in space (with 2 still not changing the data), then you have a networking system.
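Put in code, the three steps are one generic function, and storage or networking is the special case where step 2 leaves the state alone. The function names here are mine, chosen only for the illustration.

```python
def run(encode, physics, measure, data):
    """Apply the three steps: set up the system, let it evolve, measure it."""
    return measure(physics(encode(data)))


def unchanged(state):
    """Step 2 for storage and networking: the state must not change."""
    return state


# A computer: step 2 transforms the state (here, summing the weights).
print(run(list, lambda weights: [sum(weights)], lambda s: s[0], (4, 8)))  # 12

# Storage or network: encode as bytes, leave them alone, decode later or elsewhere.
text = "engraved on stone"
print(run(str.encode, unchanged, bytes.decode, text) == text)  # True
```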

Is this a site or a feed

Like 99% of the blogs out there, this site is just an HTML rendition of an RSS (or Atom) feed. Isn’t it a little silly to have millions of Web sites (visited by humans) that have their structure dictated by a machine-to-machine protocol? It is especially ironic on a site like mine, which occasionally talks about data models and protocols (and on which you would therefore expect the difference between the two to be understood). But no. Every time a new release of CMDBf comes out, for example, I create a new post with an updated version of the pseudo-algorithm for performing a graph query. Rather than having one page that gets updated (with potentially a “history” feature to access older versions).

As much as I’d like to blame the limitations of WordPress, I think it’s more a sign of my laziness. There are plenty of WordPress extensions that I have never considered. Or I could move to Drupal. The key question is, is there a way to get a site that is more useful as a unit (“show me what information William provides on his site”), while keeping the value of the feed (“tell me when William adds new content”) and not adding to my workload?
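One possible shape for this, purely as a thought experiment: treat the page as the unit and derive the feed from its revisions. The sketch below is not a WordPress or Drupal feature; the class and method names are hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class Page:
    """One stable page whose revisions double as feed entries."""
    title: str
    revisions: list = field(default_factory=list)  # oldest first

    def update(self, body, note):
        """Replace the current content while keeping the older versions."""
        self.revisions.append({"body": body, "note": note})

    def current(self):
        """What a human visitor sees: only the latest version."""
        return self.revisions[-1]["body"]

    def feed_entries(self):
        """What a feed reader sees: one entry per revision, newest first."""
        numbered = list(enumerate(self.revisions, start=1))
        return [
            f"{self.title} (v{number}): {rev['note']}"
            for number, rev in reversed(numbered)
        ]


page = Page("Graph query pseudo-algorithm")
page.update("first draft", "initial version")
page.update("second draft", "updated for a new spec release")
print(page.current())          # second draft
print(page.feed_entries()[0])  # Graph query pseudo-algorithm (v2): updated for a new spec release
```

The site stays organized around pages, subscribers still hear about each change, and the only extra work is writing the one-line note.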