Let’s start with the disclosures: by most interpretations I work for a competitor to what Salesforce.com and VMWare are trying to do with VMforce. And all I know about VMforce is what I read in a few authoritative blogs by VMWare’s Steve Herrod, VMWare/SpringSource’s Rod Johnson and Salesforce’s Anshu Sharma. So no hard feeling if you jump off right now.

Overall, I like what I see. Let me put it this way. I am now a lot more likely to write an application on force.com than I was last week. How could this not be a good thing for SalesForce, me and others like me?

On the other hand, this is also not the major announcement that the “VMforce is coming” drum-roll had tried to make us expect. If you fell for it, then I guess you can be disappointed. I didn’t and I’m not (Phil Wainewright fell for it and yet isn’t disappointed, asserting that “VMforce.com redefines the PaaS landscape” for reasons not entirely clear to me even after reading his article).

The new thing is that force.com now supports an additional runtime, in addition to Apex. That new runtime uses the Java language, with the constraint that it is used via the Spring framework. Which is familiar territory to many developers. That’s it. That’s the VMforce announcement for all practical purposes from a user’s perspective. It’s a great step forward for force.com which was hampered by the non-standard nature of Apex, but it’s just a new runtime. All the other benefits that Anshu Sharma lists in his blog (search, reporting, mobile, integration, BPM, IdM, administration) are not new. They are the platform services that force.com offers to application writers, whether they use Apex or the new Java/Spring runtime.

It’s important to realize that there are two main parts to a full PaaS platform like force.com or Google App Engine. First there are application runtimes (Apex and now Java for force.com, Python and Java for GAE). They are language-dependent and you can have several of them to support different programming languages. Second are the platform services (reports, mobile, BPM, IdM etc for force.com as we saw above, mostly IdM for Google at this point) which are mostly language agnostic (beyond a library used to access them). I think of data storage (e.g. mySQL, force.com database, Google DataStore) as part of the runtime, but it’s on the edge of the grey zone. A third category is made of actual application services (e.g. the CRM web services out of SalesForce.com or the application services out of Google Apps) which I tend not to consider part of PaaS but again there are gray zones between application support services and application services. E.g. how domain-specific does your rule engine have to be before it moves from one category to the other?

As Umit Yalcinalp (who works for SalesForce) told me on Twitter “regardless of the runtime the devs using the Force.com db will get the same platform benefits, chatter, workflow, analytics”. What I called the platform services above. Which, really, is where most of the PaaS value lies anyway. A language runtime is just a starting point.

So where are VMWare and SpringSource in this picture? Well, from the point of view of the user nowhere, really. SalesForce could have built this platform themselves, using the Spring framework on top of Tomcat, WebLogic, JBoss… Itself running on any OS they want. With or without a hypervisor. These are all implementation details and are SalesForce’s problem, not ours as application developers.

It so happens that they have chosen to run this as a partnership with VMWare/SpringSource which makes a lot of sense from a portfolio/expertise perspective, of course. But this choice is not visible to the application developer making use of this platform. And it shouldn’t be. That’s the whole point of PaaS after all, that we don’t have to care.

But VMWare and SpringSource really want us to know that they are there, so Rod Johnson leads by lifting the curtain and explaining that:

“VMforce uses the Force.com physical infrastructure to run vSphere with a special customized vCloud layer that allows for seamless scaling and management. Above this layer VMforce runs SpringSource tc Server instances that provide the execution environment for the enterprise applications that run on VMforce.”

[Side note: notice what’s missing? The operating system. It’s there of course, most likely some Linux distribution but Rod glances over it, maybe because it’s a missing link in VMWare’s “we have all the pieces” story; unlike Oracle who can provide one or, even better, do without. Just saying…]

VMWare wants us to know they are under the covers because of course they have much larger aspirations than to be a provider to SalesForce. They want to use this as a proof point to sell their SpringSource+VMWare stack in other settings, such as private clouds and other public cloud providers (modulo whatever exclusivity period may be in their contract with SalesForce). And VMforce, if it works well when it launches, is a great validation for this strategy. It’s natural that they want people to know that they are behind the curtain and can be called on to replicate this elsewhere.

But let’s be clear about what part they can replicate. It’s the Java/Spring language runtime and its underlying infrastructure. Not the platform services that are part of the SalesForce platform. Not an IdM solution, not a rules engine, not a business process engine, etc. We can expect that they are hard at work trying to fill these gaps, as the RabbitMQ acquisition illustrates, but for now all this comes from force.com and isn’t directly replicable. Which means that applications that use them aren’t quite so portable.

In his post, Steve Herrod quickly moves past the VMforce announcement to focus on the SpringSource+VMWare infrastructure part, the one he hopes to see multiplied everywhere. The key promise, from the developers’ perspective, is application portability. And while the use of Java+Spring definitely helps a lot in terms of code portability I see some promises in terms of data portability that will warrant scrutiny when VMforce actually rolls out: “you should be able to extract the code from the cloud it currently runs in and move it, along with its data, to another cloud choice”.

It sounds very nice, but the underlying issues are:

- Does the code change depending on whether I am talking to a local relational DB in my private cloud or whether I am on VMforce and using the force.com database?

- If it doesn’t then the application is portable, but an extra service i still needed to actually move the data from one cloud to the other (can this be done in-flight? what downtime is needed?)

- What about the other VMforce.com services (chatter, workflow, analytics…)? If I use them in my code can I keep using them once I migrate out of VMforce to a private cloud? Are they remotely invocable? Does the code change? And if I want to completely sever my links with SalesForce, can I find alternative implementations of these application platform services in my private cloud? Or from another public cloud provider? The answer to these is probably no, which means that you are only portable out of VMforce if you restrain yourself from using much of the value of the platform. It’s not even clear whether you can completely restrain yourself from using it, e.g. can you run on force.com without using their IdM system?

All these are hard questions. I am not blaming anyone for not answering them today since no-one does. But we shouldn’t sweep them under the rug. I am sure VMWare is working on finding workable compromises but I doubt it will be as simple, clean and portable as Steve Herrod implies. It’s funny how Steve and Anshu’s posts seem to reinforce and congratulate one another, until you realize that they are in large part talking about very different things. Anshu’s is almost entirely about the force.com application platform services (sprinkled with some weird Facebook envy), Steve’s is entirely about the application runtime and its infrastructure.

One thing that I am surprised not to see mentioned is the management aspect of the platform, especially considering the investment that SpringSource made in Hyperic. I can only assume that work is under way on this and that we’ll hear about it soon. One aspect of the management story that concerns me a bit is the lack of acknowledgment of the challenges of configuration management in a PaaS setting. Especially when I read Steve Herrod asserting that the VMWare/SpringSource PaaS platform is going to free us from the burden of “handling code modifications that may be required as the middleware versions change”. There seems to be a misconception that because the application administrators are not the ones doing the infrastructure updates they don’t need to worry about the impact of these updates on their application. Is Steve implying that the first release of the VMWare/SpringSource PaaS stack is going to be so perfect that the hypervisor, guest OS and app server will never have to be patched and versioned? If that’s not the case, then why are those patches suddenly less likely impact the application code? In fact the situation is even worse as the application administrator does not know which hypervisor/OS/middleware patches are being applied and when. They can’t test against the new version ahead of time for validation and they can’t make sure the change is scheduled during a non-critical period for their business. I wrote an entire blog post on this issue six months ago and it’s a little bit disheartening to see the issue flatly denied and ignored. Management is not just monitoring.

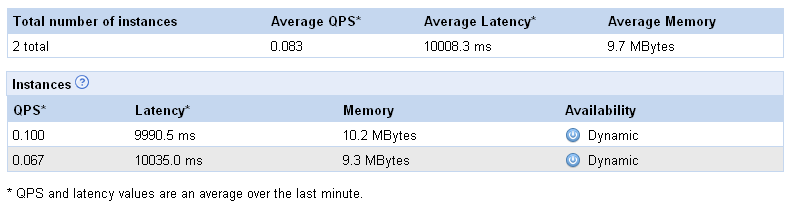

Here is another intriguing comment in Steve’s entry: “one of the key differentiators with EC2 based PaaS will be the efficiencies for the many-app model. Customers are frustrated with the need to buy a whole VM as the minimum service unit for their applications. Our PaaS will provide fine-grained resource separation”. I had to read it twice when I realized that the VMWare CTO was telling us that splitting a physical machine into VMs is not a good enough way to share its resources and that you really need middleware-level multi-tenancy. But who can disagree that a GAE-like architecture can support more low-traffic applications on the same server than anything based on VM-based sharing? Which (along with deep pockets) puts Google in position to offer free hosting for low-traffic applications, a great way to build adoption.

These are very early days in the history of PaaS. VMWare, like the rest of us, will need to tackle all these issues one by one. In the meantime, this is an interesting announcement and a noticeable milestone. Let’s just keep our eyes open on the incremental nature of progress and the long list of remaining issues.

[UPDATED 2010/4/29: See the follow-up post, PaaS portability challenges and the VMforce example.]

[UPDATED 2010/6/9: This entry points out how the OS level is a gap in VMWare’s portfolio. They took a step in addressing this today, by partnering with Novell to offer SUSE support.]

yalcinalp