Category Archives: Manageability

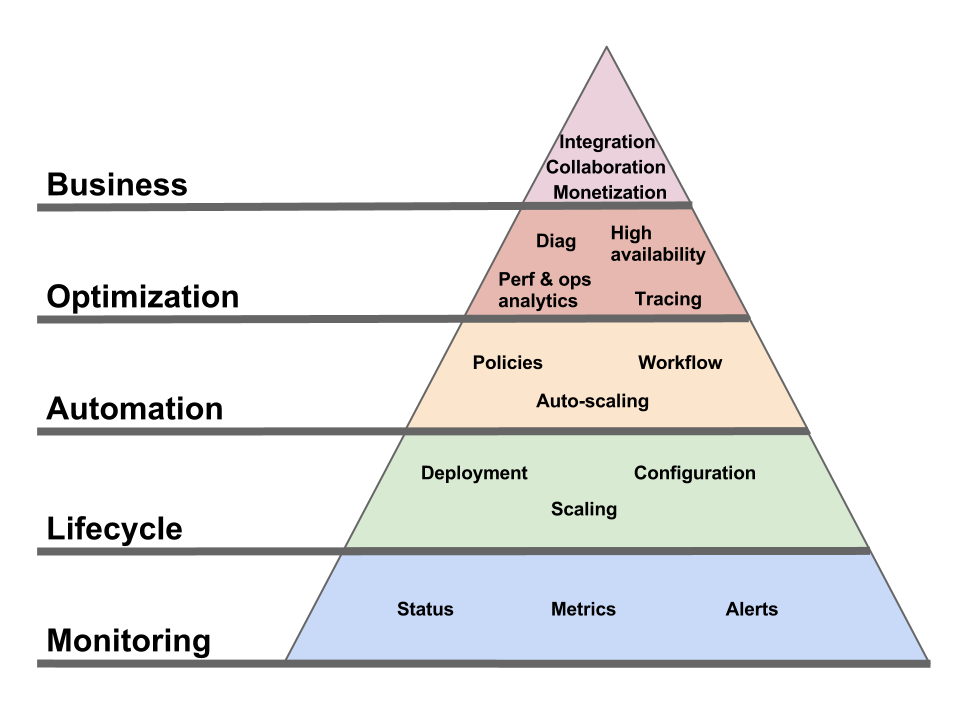

Pyramid of needs for Cloud management

Filed under Application Mgmt, Automation, Business, Cloud Computing, Everything, Governance, IT Systems Mgmt, Manageability, Utility computing

Come for the PaaS Functional Model, stay for the Cloud Operational Model

The Functional Model of PaaS is nice, but the Operational Model matters more.

Let’s first define these terms.

The Functional Model is what the platform does for you. For example, in the case of AWS S3, it means storing objects and making them accessible via HTTP.

The Operational Model is how you consume the platform service. How you request it, how you manage it, how much it costs, basically the total sum of the responsibility you have to accept if you use the features in the Functional Model. In the case of S3, the Operational Model is made of an API/UI to manage it, a bill that comes every month, and a support channel which depends on the contract you bought.

The Operational Model is where the S (“service”) in “PaaS” takes over from the P (“platform”). The Operational Model is not always as glamorous as new runtime features. But it’s what makes Cloud Cloud. If a provider doesn’t offer the specific platform feature your application developers desire, you can work around it, either by using a slightly less optimal approach or by building the feature yourself on top of lower-level building blocks (as Netflix did with Cassandra on EC2 before DynamoDB was an option). But if your provider doesn’t offer an Operational Model that supports your processes and business requirements, then you’re getting a hipster’s app server, not a real PaaS. It doesn’t matter how easy it was to put together a proof-of-concept on top of that PaaS if using it in production is playing Russian roulette with your business.

If the Cloud Operational Model is so important, what defines it and what makes a good Operational Model? In short, the Operational Model must be able to integrate with the consumer’s key processes: the business processes, the development processes, the IT processes, the customer support processes, the compliance processes, etc.

To make things more concrete, here are some of the key aspects of the Operational Model.

Deployment / configuration / management

I won’t spend much time on this one, as it’s the most understood aspect. Most Clouds offer both a UI and an API to let you provision and control the artifacts (e.g. VMs, application containers, etc) via which you access the PaaS functional interface. But, while necessary, this API is only a piece of a complete operational interface.

Support

What happens when things go wrong? What support channels do you have access to? Every Cloud provider will show you a list of support options, but what’s really behind these options? And do they have the capability (technical and logistical) to handle all your issues? Do they have deep expertise in all the software components that make up their infrastructure (especially in PaaS) from top to bottom? Do they run their own datacenter or do they themselves rely on a customer support channel for any issue at that level?

SLAs

I personally think discussions around SLAs are overblown (it seems like people try to reduce the entire Cloud Operational Model to a provisioning API plus an SLA, which is comically simplistic). But SLAs are indeed part of the Operational Model.

Infrastructure change management

It’s very nice how, in a PaaS setting, the Cloud provider takes care of all change management tasks (including patching) for the infrastructure. But the fact that your Cloud provider and you agree on this doesn’t neutralize Murphy’s law any more than me wearing Michael Jordan sneakers neutralizes the law of gravity when I (try to) dunk.

In other words, if a patch or update is worth testing in a staging environment if you were to apply it on-premise, what makes you think that it’s less likely to cause a problem if it’s the Cloud provider who rolls it out? Sure, in most cases it will work just fine and you can sing the praise of “NoOps”. Until the day when things go wrong, your users are affected and you’re taken completely off-guard. Good luck debugging that problem, when you don’t even know that an infrastructure change is being rolled out and when it might not even have been rolled out uniformly across all instances of your application.

How is that handled in your provider’s Operational Model? Do you have visibility into the change schedule? Do you have the option to test your application on the new infrastructure or to at least influence in any way how and when the change gets rolled out to your instances?

Note: I’ve covered this in more detail before and so has Chris Hoff.

Diagnostic

Developers have assembled a panoply of diagnostic tools (memory/thread analysis, BTM, user experience, logging, tracing…) for the on-premise model. Many of these won’t work in PaaS settings because they require a console on the local machine, or an agent, or a specific port open, or a specific feature enabled in the runtime. But the need doesn’t go away. How does your PaaS Operational Model support that process?

Customer support

You’re a customer of your Cloud, but you have customers of your own and you have to support them. Do you have the tools to react to their issues involving your Cloud-deployed application? Can you link their service requests with the related actions and data exposed via your Cloud’s operational interface?

Security / compliance

Security is part of what a Cloud provider has to worry about. The problem is, it’s a very relative concept. The issue is not what security the Cloud provider needs, it’s what security its customers need. They have requirements. They have mandates. They have regulations and audits. In short, they have their own security processes. The key question, from their perspective, is not whether the provider’s security is “good”, but whether it accommodates their own security process. Which is why security is not a “trust us” black box (I don’t think anyone has coined “NoSec” yet, but it can’t be far behind “NoOps”) but an integral part of the Cloud Operational Model.

Business management

The oft-repeated mantra is that Cloud replaces capital expenses (CapEx) with operational expenses (OpEx). There’s a lot more to it than that, but it surely contributes a lot to OpEx and that needs to be managed. How does the Cloud Operational Model support this? Are buyer-side roles clearly identified (who can create an account, who can deploy a service instance, who can manage a deployed instance, etc) and do they map well to the organizational structure of the consumer organization? Can charges be segmented and attributed to various cost centers? Can quotas be set? Can consumption/cost projections be run?
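To make those last three questions concrete, here is a minimal sketch of what they amount to on the buyer side, assuming the provider's bill can be exported as line items tagged with a cost center. The record layout, the quota table and the function names are all hypothetical; no real billing API is implied.

```python
from collections import defaultdict

# Hypothetical billing line items, each tagged with the cost center it belongs to.
charges = [
    {"cost_center": "marketing", "service": "storage", "usd": 120.0},
    {"cost_center": "marketing", "service": "compute", "usd": 450.0},
    {"cost_center": "engineering", "service": "compute", "usd": 300.0},
]

# Hypothetical monthly quotas per cost center, in USD.
quotas = {"marketing": 500.0, "engineering": 1000.0}


def charges_by_cost_center(items):
    """Segment charges: total spend per cost center."""
    totals = defaultdict(float)
    for item in items:
        totals[item["cost_center"]] += item["usd"]
    return dict(totals)


def over_quota(totals, limits):
    """Return the cost centers whose spend exceeds their quota (no quota means no limit)."""
    return sorted(
        center
        for center, total in totals.items()
        if total > limits.get(center, float("inf"))
    )


def project_month(total_so_far, days_elapsed, days_in_month):
    """Naive linear projection of month-end spend from spend to date."""
    return total_so_far / days_elapsed * days_in_month


totals = charges_by_cost_center(charges)
flagged = over_quota(totals, quotas)
```

None of this is hard to write. The point is that it is only possible if the Operational Model exposes charges with enough structure (here, the cost center tag) to feed it.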

We all (at least those of us who aren’t accountants) love a great story about how some employee used a credit card to get from the Cloud something that the normal corporate process would not allow (or at too high a cost). These are fun for a while, but it’s not sustainable. This doesn’t mean organizations will not be able to take advantage of the flexibility of Cloud, but they will only be able to do it if the Cloud Operational Model provides the needed support to meet the requirements of internal control processes.

Conclusion

Some of the ways in which the Cloud Operational Model materializes can be unexpected. They can seem old-fashioned. Let’s take Amazon Web Services (AWS) as an example. When they started, ownership of AWS resources was tied to an individual user’s Amazon account. That’s a big Operational Model no-no. They’ve moved past that point. As an illustration of how the Operational Model materializes, here are some of the features that are part of Amazon’s:

- You can Fedex a drive and have Amazon load the data to S3.

- You can optimize your costs for flexible workloads via spot instances.

- The monitoring console (and API) will let you know ahead of time (when possible) which instances need to be rebooted and which will need to be terminated because they run on a soon-to-be-decommissioned server. Now you could argue that it’s a limitation of the AWS platform (lack of live migration) but that’s not the point here. Limitations exist and the role of the Operational Model is to provide the tools to handle them in an acceptable way.

- Amazon has a program to put customers in touch with qualified System Integrators.

- You can use your Amazon support channel for questions related to some 3rd party software (though I don’t know what the depth of that support is).

- To support your security and compliance requirements, AWS supports multi-factor authentication and has achieved some certifications and accreditations.

- Instance status checks can help streamline your diagnostic flows.

These Operational Model features don’t generate nearly as much discussion as new Functional Model features (“oh, look, a NoSQL AWS service!”). That’s OK. The Operational Model doesn’t seek the limelight.

Business applications are involved, in some form, in almost every activity taking place in a company. Those activities take many different forms, from a developer debugging an application to an executive examining operational expenses. The PaaS Operational Model must meet their needs.

Everything is PaaSible

That’s the title of an article I wrote for InfoQ and which went live today.

If you can get past the punny title you’ll read about the following points:

- In traditional (and IaaS) environments, many available application infrastructure features remain rarely used because of the cost (perceived or real) of adding them to the operational environment.

- Most PaaS environments of today don’t even let you make use of these features, at any cost, because of constraints imposed by PaaS providers for the sake of simplifying and streamlining their operations.

- In the future, PaaS will not only make these available but available at a negligible incremental operational cost.

- Even beyond that, PaaS will make available application services that are, in traditional settings, completely out of scope for the application programmer. Early examples include CDN, DNS and load balancing services offered, for example, by Amazon. An application developer in most traditional data centers would have to jump through endless hoops if she wanted to control these services within the application. I believe that these network-related services are just the low-hanging fruit and many more once-unthinkable infrastructure services will become programmable as part of the application.

PaaS will become less about “hosting” and more about offering application services. In other words, going back to the formula I proposed on Twitter:

Cloud = Hosting + SOA

IaaS is a lot more “hosting” than SOA, PaaS is a lot more “SOA” (application infrastructure services available via APIs) than “hosting”.

You can read the full article for more.

Filed under API, Application Mgmt, Articles, Cloud Computing, Everything, Manageability, Mgmt integration, Middleware, PaaS, Utility computing

DMTF publishes draft of Cloud API

Note to anyone who still cares about IaaS standards: the DMTF has published a work in progress.

There was a lot of interest in the topic in 2009 and 2010. Some heated debates took place during Cloud conferences and a few symposiums were organized to try to coordinate various standards efforts. The DMTF started an “incubator” on the topic. Many companies brought submissions to the table, at various levels of maturity: VMware, Fujitsu, HP, Telefonica, Oracle and Red Hat. IBM and Microsoft might also have submitted something, I can’t remember for sure.

The DMTF has been chugging along. The incubator turned into a working group. Unfortunately (but unsurprisingly), it limited itself to the usual suspects (and not all the independent Cloud experts out there) and kept the process confidential. But this week it partially lifted the curtain by publishing two work-in-progress documents.

They can be found at http://dmtf.org/standards/cloud but if you read this after March 2012 they won’t be there anymore, as DMTF likes to “expire” its work-in-progress documents. The two docs are:

- Cloud Infrastructure Management Interface (CIMI) Model and REST Interface Specification, and

- Cloud Infrastructure Management Interface – Common Information Model (CIMI-CIM) Specification

The first one is the interesting one, and the one you should read if you want to see where the DMTF is going. It’s a RESTful specification (at the cost of some contortions, e.g. section 4.2.1.3.1). It supports both JSON and XML (bad idea). It plans to use RelaxNG instead of XSD (good idea). And also CIM/MOF (not a joke, see the second document for proof). The specification is pretty ambitious (it covers not just lifecycle operations but also monitoring and events) and well written, especially for a work in progress (props to Gil Pilz).

I am surprised by how little reaction there has been to this publication considering how hotly debated the topic used to be. Why is that?

A cynic would attribute this to people having given up on DMTF providing a Cloud API that has any chance of wide adoption (the adjoining CIM document sure won’t help reassure DMTF skeptics).

To the contrary, an optimist will see this low-key publication as a sign that the passions have cooled, that the trusted providers of enterprise software are sitting at the same table and forging consensus, and that the industry is happy to defer to them.

More likely, I think people have, by now, enough Cloud experience to understand that standardizing IaaS APIs is a minor part of the problem of interoperability (not to mention the even harder goal of portability). The serialization and plumbing aspects don’t matter much, and if they do to you then there are some good libraries that provide mappings for your favorite language. What matters is the diversity of resources and services exposed by Cloud providers. Those choices strongly shape the design of your application, much more than the choice between JSON and XML for the control API. And nobody is, at the moment, in position to standardize these services.

So congrats to the DMTF Cloud Working Group for the milestone, and please get the API finalized. Hopefully it will at least achieve the goal of narrowing down the plumbing choices to three (AWS, OpenStack and DMTF). But that’s not going to solve the hard problem.

Yoga framework for REST-like partial resource access

A tweet by Stefan Tilkov brought Yoga to my attention, “a framework for supporting REST-like URI requests with field selectors”.

As the name suggests, “Yoga” lets you practice some contortions that would strain a run-of-the-mill REST programmer. Basically, you can use a request like

GET /teams/4234.json?selector=:(members:(id,name,birthday))

to retrieve the id, name and birthday of all members of a softball team, rather than having to retrieve the team roster and then do a GET on each and every team member to retrieve their name and birthday (and lots of other information you don’t care about).
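For readers who want to see the mechanics, here is a minimal sketch of how such a field selector could be parsed and applied to an in-memory resource. This is not Yoga's implementation: `parse_selector`, `apply_selector` and the sample team are hypothetical, and the parser only handles the nested `:(a,b:(c,d))` shape.

```python
def parse_selector(text):
    """Parse ':(a,b:(c,d))' into {'a': None, 'b': {'c': None, 'd': None}}."""
    pos = 0

    def parse_group():
        nonlocal pos
        if text[pos:pos + 2] != ":(":
            raise ValueError(f"expected ':(' at position {pos}")
        pos += 2
        fields = {}
        while True:
            start = pos
            while pos < len(text) and text[pos] not in ",:()":
                pos += 1
            name = text[start:pos]
            if not name:
                raise ValueError(f"expected a field name at position {pos}")
            # A field followed by ':(' carries its own nested selector.
            if text[pos:pos + 2] == ":(":
                fields[name] = parse_group()
            else:
                fields[name] = None
            if pos >= len(text):
                raise ValueError("unbalanced selector: missing ')'")
            if text[pos] == ",":
                pos += 1
                continue
            if text[pos] == ")":
                pos += 1
                return fields
            raise ValueError(f"unexpected character {text[pos]!r} at position {pos}")

    result = parse_group()
    if pos != len(text):
        raise ValueError("trailing characters after selector")
    return result


def apply_selector(resource, fields):
    """Keep only the selected fields, recursing into nested objects and lists."""
    if isinstance(resource, list):
        return [apply_selector(item, fields) for item in resource]
    selected = {}
    for name, nested in fields.items():
        if name not in resource:
            continue
        value = resource[name]
        selected[name] = value if nested is None else apply_selector(value, nested)
    return selected


# Hypothetical team resource, as the server would hold it before filtering.
team = {
    "id": 4234,
    "name": "Sluggers",
    "members": [
        {"id": 1, "name": "Ann", "birthday": "1990-04-01", "address": "12 Elm St"},
        {"id": 2, "name": "Bob", "birthday": "1988-11-23", "address": "9 Oak Ave"},
    ],
}

result = apply_selector(team, parse_selector(":(members:(id,name,birthday))"))
```

Here `result` contains only the `members` list, and each member is reduced to its `id`, `name` and `birthday`. The whole roster comes back in one response instead of one GET per member.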

Where have I seen this before? That use case came up over and over again when we were using SOAP Web services for resource management. I have personally crafted support for it a few times. Using this blog to support my memory, here is the list of SOAP-related management efforts listed in the “post-mortem on the previous IT management revolution”:

WSMF, WS-Manageability, WSDM, OGSI, WSRF, WS-Management, WS-ResourceTransfer, WSRA, WS-ResourceCatalog, CMDBf

Each one of them supports this “partial access” use case: WS-Management has SelectorSet, WSRF has ResourceProperties, CMDBf has ContentSelector, WSRA has Fragments, etc.

Years ago, I also created the XMLFrag SOAP header to attack a more general version of this problem. There may be something to salvage in all this for people willing to break REST orthodoxy (with the full knowledge of what they gain and what they lose).

I’m not being sarcastic when I ask “where have I seen this before”. The problem hasn’t gone away just because we failed to solve it in a pragmatic way with SOAP. If the industry is moving towards HTTP+JSON then we’ll need to solve it again on that ground and it’s no surprise if the solution looks similar.

I have a sense of what’s coming next. XPath-for-JSON-over-the-wire. See, getting individual properties is nice, but sometimes you want more. You want to select only the members of the team who are above 14 years old. Or you just want to count these members rather than retrieve specific information about them individually. Or you just want a list of all the cities they live in. Etc.
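To pin down what those three requests compute, here they are written as plain Python over a hypothetical in-memory member list. An over-the-wire query language would have to express the same filter, aggregate and projection so that the server can evaluate them; this sketch says nothing about what that syntax should look like.

```python
# Hypothetical member records; the field names are illustrative only.
members = [
    {"name": "Ann", "age": 16, "city": "Palo Alto"},
    {"name": "Bob", "age": 13, "city": "San Jose"},
    {"name": "Cy", "age": 15, "city": "Palo Alto"},
]

# Filter: only the members who are above 14 years old.
older = [m for m in members if m["age"] > 14]

# Aggregate: count those members instead of retrieving each one.
older_count = len(older)

# Projection with de-duplication: the cities the members live in.
cities = sorted({m["city"] for m in members})
```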

But even though we want this, I am not convinced (anymore) that we need it.

What I know we need is better support for graph queries. Kingsley Idehen once provided a good explanation of why that is and how SPARQL and XML query languages (or now JSON query languages) complement one another (wouldn’t that be a nice trifecta: RDF/OWL’s precise modeling, JSON’s friendly syntax and SPARQL’s graph support – but I digress).

Going back to partial resource access, the last feature is the biggie: a fine-grained mechanism to update resource properties. That one is extra-hard.

Comments on “The Good, the Bad, and the Ugly of REST APIs”

A survivor of intimate contact with many Cloud APIs, George Reese shared his thoughts about the experience in a blog post titled “The Good, the Bad, and the Ugly of REST APIs“.

Here are the highlights of his verdict, with some comments.

“Supporting both JSON and XML [is good]”

I disagree: Two versions of a protocol is one too many (the post behind this link doesn’t specifically discuss the JSON/XML dichotomy but its logic applies to that situation, as Tim Bray pointed out in a comment).

“REST is good, SOAP is bad”

Not necessarily true for all integration projects, but in the context of Cloud APIs, I agree. As long as it’s “pragmatic REST”, not the kind that involves silly contortions to please the REST police.

“Meaningful error messages help a lot”

True and yet rarely done properly.

“Providing solid API documentation reduces my need for your help”

Goes without saying (for a good laugh, check out the commenter on George’s blog entry who wrote that “if you document an API, you API immediately ceases to have anything to do with REST” which I want to believe was meant as a joke but appears written in earnest).

“Map your API model to the way your data is consumed, not your data/object model”

Very important. This is a core part of Humble Architecture.

“Using OAuth authentication doesn’t map well for system-to-system interaction”

Agreed.

“Throttling is a terrible thing to do”

I don’t agree with that sweeping statement, but when George expands on this thought what he really seems to mean is more along the lines of “if you’re going to throttle, do it smartly and responsibly”, which I can’t disagree with.

“And while we’re at it, chatty APIs suck”

Yes. And one of the main causes of API chattiness is fear of angering the REST gods by violating the sacred ritual. Either ignore that fear or, if you can’t, hire an expensive REST consultant to rationalize a less-chatty design with some media-type black magic and REST-bless it.

Finally George ends by listing three “ugly” aspects of bad APIs (“returning HTML in your response body”, “failing to realize that a 4xx error means I messed up and a 5xx means you messed up” and “side-effects to 500 errors are evil”) which I agree on but I see those as a continuation of the earlier point about paying attention to the error messages you return (because that’s what the developers who invoke your API will be staring at most of the time, even if they represent only 0.01% of the messages you return).
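The 4xx/5xx distinction and the "no HTML in the body" rule fit in a few lines. The sketch below is only an illustration of the convention, not any particular provider's error format; the function names and the JSON field names are assumptions.

```python
import json


def blame(status):
    """Say who has to act on an HTTP status code."""
    if 400 <= status <= 499:
        return "client"  # the caller sent something wrong and must change the request
    if 500 <= status <= 599:
        return "server"  # the provider failed; the caller's request was not at fault
    return "nobody"


def error_body(status, code, message):
    """Build a machine-readable JSON error body instead of an HTML page."""
    return json.dumps(
        {"status": status, "code": code, "message": message, "blame": blame(status)},
        sort_keys=True,
    )


body = error_body(400, "bad_selector", "selector is missing a closing parenthesis")
```

A client can parse `body` and decide whether to fix its request or to retry later, which is exactly what it cannot do with an HTML error page.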

What’s most interesting is what’s NOT in George’s list. No nit-picking about REST purity. That tells you something about what matters to implementers.

If I haven’t yet exhausted my quota of self-referential links, you can read REST in practice for IT and Cloud management for more on the topic.

Filed under API, Cloud Computing, Everything, Implementation, Manageability, Mgmt integration, Modeling, Protocols, REST, SOAP, Specs, Tech

Nice incremental progress in Google App Engine SDK 1.4

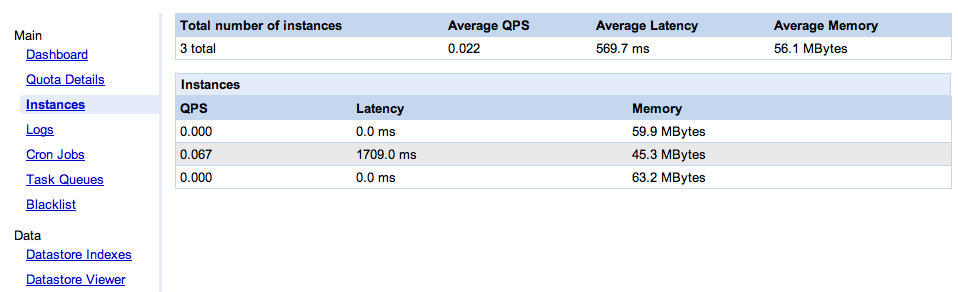

When Google released version 1.3.8 of the Google App Engine SDK in October, they introduced an instance console, showing you how many instances are serving your application and some basic metrics about these instances. I wrote a blog to consider the implications of providing this level of visibility to application administrators. It also pointed out some shortcomings of this first version of the console.

The most glaring problem was that the console showed an “average latency” which was just a straight average of the latencies of all the instances, independently of the traffic they see. Which is a meaningless number.

Today, Google released an update to the SDK (1.4), and along with it some minor updates to the instance console. Except that, as you can see below, the screen capture in their announcement happens to show three instances that have processed exactly the same number of messages. Which means that we can’t tell whether they have fixed the “unweighted average” problem or not. Is this just by chance? Google, WTF? (which stands for “what’s the formula?”, of course).

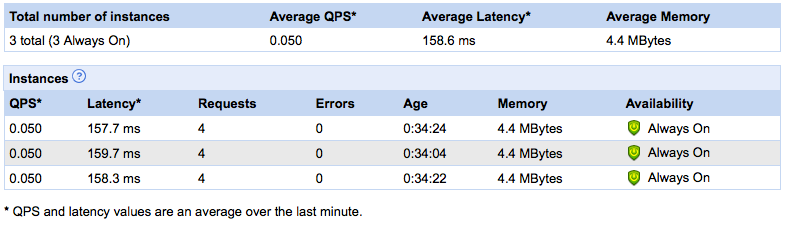

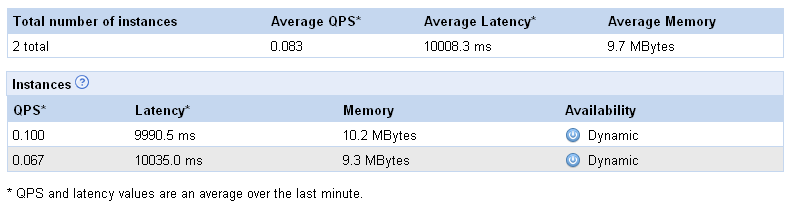

I decided it was worth spending a few minutes to find the answer. I don’t have any app currently in use on GAE, but it doesn’t take much work to generate enough load to wake up one of my old apps and get it to spin a couple of instances. Here is the resulting console instance:

If you run the numbers, you can see that they’ve fixed that issue; the average latency is now weighted based on instance traffic. Thanks Google for listening.

Apparently, not all the updates have trickled down to my version of the instance console. The “requests”, “errors” and “age” columns are missing. I assume they’re on their way. Seeing the age of the instances, especially, is a nice addition, one of those I requested in my blog.

In the grand scheme of things, these minor updates to the console (which remains quite basic) are not the big news. The major announcement with SDK 1.4 is that the dreaded 30 seconds limit on execution time has been lifted for background tasks (those from Task Queue and Cron). It’s now a much more manageable 10 minutes. This doesn’t apply to the execution of Web requests served by your app.

Google App Engine has been under criticism recently, and that 30-second limit (along with reliability issues) figured prominently in the complaints. Assuming the reliability issues are also coming under control, this update will go a long way towards addressing these issues.

Just so you realize how lucky you are if you are just now starting with Google App Engine, here is the kind of hoops you had to jump through, in the early days, to process any task that took a significant amount of time. This was done a year before the Cron and Task Queue features were added to GAE.

Another nice addition with SDK 1.4 is that you can now retrieve the source code of your application from Google’s servers. Of course you should never need that if you are rigorous and well-organized… Presumably this is only for Python since in the Java case Google’s servers never see the source code.

The steady progress of the GAE SDK continues.

Partial resource update, one more time

Alex Scordellis has a good blog post about how to handle partial PUT in REST. It starts by explaining why partial PUT is needed in the first place. And then (including in the comments) it runs into the issues this brings and proposes some solutions.

I have bad news. There are many more issues.

Let’s pick a simple example. What does it mean if an element is not present in a partial update? Is it an explicit omission, intended to represent the need to remove this element in the representation? Or does it mean “don’t change its current value”. If the latter, then how do I do removal? Do I need partial DELETE like I have partial PUT? Hopefully not, but then I have to have a mechanism to remove elements as part of a PUT. Empty value? That doesn’t necessarily mean the same thing as an absent element. Nil value? And how do I handle this with JSON?

And how do you deal with repeating elements? If you PUT an element of that type, is it an addition or a replacement? If replacement, which one(s) are you replacing? Or do you force me to PUT the entire list? No matter how long it is? Even if it increases the risk of concurrency issues?
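To show that any answer to these questions is a trade-off, here is a minimal sketch of one possible convention, the one used by JSON Merge Patch (RFC 7396): an absent member means "leave it alone", a null value means "remove it", nested objects are merged, and lists are replaced wholesale. The `merge_patch` function and the sample member are illustrative, not a recommendation.

```python
def merge_patch(target, patch):
    """Apply a partial update using JSON Merge Patch (RFC 7396) semantics."""
    if not isinstance(patch, dict):
        # Scalars and lists replace the current value entirely.
        return patch
    result = dict(target) if isinstance(target, dict) else {}
    for key, value in patch.items():
        if value is None:
            # null in the patch means "remove this member".
            result.pop(key, None)
        else:
            result[key] = merge_patch(result.get(key), value)
    return result


# Hypothetical stored representation of a team member.
member = {
    "name": "Ann",
    "nickname": "Ace",
    "phones": ["555-0100", "555-0101"],
    "address": {"city": "Palo Alto", "zip": "94301"},
}

# "name" is absent, so it is left unchanged; "nickname" is null, so it is removed.
patch = {
    "nickname": None,
    "phones": ["555-0199"],
    "address": {"city": "San Jose"},
}

updated = merge_patch(member, patch)
```

The convention answers the questions above, at a price: you can no longer store an explicit null, and adding one phone number still means sending the entire list, with the concurrency risk that comes with it.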

Lots of similar issues. These two are just off the top of my head, memories from hours locked in a room with my HP, IBM, Intel and Microsoft accomplices.

You know what you end up with? You end up with this. Partial Put in WS-RT. I can hear you scream from here.

I am the ghost of dead partial update mechanisms, coming back to haunt you…

As much as WS-* was criticized for re-inventing HTTP, what we see here is HTTP people re-inventing partial resource update mechanisms like those in WSDM, WS-Management and WS-ResourceTransfer. Which is fine, I am in no way advocating that they should re-use these specs.

But let’s realize that while a lot of the complexity in WS-* was unnecessary, some of it actually was a reflection of the complexity of the task at hand. And that complexity doesn’t go away because you get rid of a SOAP envelope and of stupid WS-Addressing headers.

The good news is that we’ve made a lot of the mistakes already and we’ve learned some lessons (see this technical rant, this post-mortem or this experiment). The bad news is that there are plenty of new mistakes waiting to be made.

Good luck. I mean it sincerely.

Filed under API, Everything, IT Systems Mgmt, Manageability, Protocols, REST, Specs, Tech, WS-Management, WS-ResourceTransfer, WS-Transfer, XMLFrag

Cloud management is to traditional IT management what spreadsheets are to calculators

It’s all in the title of the post. An elevator pitch short enough for a 1-story ride. A description for business people. People who don’t want to hear about models, virtualization, blueprints and devops. But people who also don’t want to be insulted with vague claims about “business/IT alignment” and “agility”.

The focus is on repeatability. Repeatability saves work and allows new approaches. I’ve found spreadsheets (and “super-spreadsheets”, i.e. more advanced BI tools) to be a good analogy for business people. Compared to analysts furiously operating calculators, spreadsheets save work and prevent errors. But beyond these cost savings, they allow you to do things you wouldn’t even try to do without them. It’s not just the same process, done faster and cheaper. It’s a more mature way of running your business.

Same with the “Cloud” style of IT management.

Lifting the curtain on PaaS Cloud infrastructure (can you handle the truth?)

The promise of PaaS is that application owners don’t need to worry about the infrastructure that powers the application. They just provide application artifacts (e.g. WAR files) and everything else is taken care of. Backups. Scaling. Infrastructure patching. Network configuration. Geographic distribution. Etc. All these headaches are gone. Just pick from a menu of quality of service options (and the corresponding price list). Make your choice and forget about it.

In theory.

In practice no abstraction is leak-proof and the abstractions provided by PaaS environments are even more porous than average. The first goal of PaaS providers should be to shore them up, in order to deliver on the PaaS value proposition of simplification. But at some point you also have to acknowledge that there are some irreducible leaks and take pragmatic steps to help application administrators deal with them. The worst thing you can do is have application owners suffer from a leaky abstraction and refuse to even acknowledge it because it breaks your nice mental model.

Google App Engine (GAE) gives us a nice and simple example. When you first deploy an application on GAE, it is deployed as just one instance. As traffic increases, a second instance comes up to handle the load. Then a third. If traffic decreases, one instance may disappear. Or one of them may just go away for no reason (that you’re aware of).

It would be nice if you could deploy your application on what looks like a single, infinitely scalable, machine and not ever have to worry about horizontal scale-out. But that’s just not possible (at a reasonable cost) so Google doesn’t try particularly hard to hide the fact that many instances can be involved. You can choose to ignore that fact and your application will still work. But you’ll notice that some requests take a lot more time to complete than others (which is typically the case for the first request to hit a new instance). And some requests will find an empty local cache even though your application has had uninterrupted traffic. If you choose to live with the “one infinitely scalable machine” simplification, these are inexplicable and unpredictable events.

Last week, as part of the release of the GAE SDK 1.3.8, Google went one step further in acknowledging that several instances can serve your application, and helping you deal with it. They now give you a console (pictured below) which shows the instances currently serving your application.

I am very glad that they added this console, because it clearly puts on the table the question of how much your PaaS provider should open the kimono. What’s the right amount of visibility, somewhere between “one infinitely scalable computer” and giving you fan speeds and CPU temperature?

I don’t know what the answer is, but unfortunately I am pretty sure this console is not it. It is supposed to be useful “in debugging your application and also understanding its performance characteristics“. Hmm, how so exactly? Not only is this console very simple, it’s almost useless. Let me enumerate the ways.

Misleading

Actually it’s worse than useless, it’s misleading. As we can see on the screen shot, two of the instances saw no traffic during the collection period (which, BTW, we don’t know the length of), while the third one did all the work. At the top, we see an “average latency” value. Averaging latency across instances is meaningless if you don’t weight it properly. In this case, all the requests went to the instance that had an average latency of 1709ms, but apparently the overall average latency of the application is 569.7ms (yes, that’s 1709/3). Swell.
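The arithmetic is easy to reproduce. In the sketch below the latencies are the ones from the screen shot; the request count of the busy instance is not recoverable from the text, so the value 120 is an assumption (any positive number gives the same weighted result).

```python
# (request_count, average_latency_ms) per instance.
# The two idle instances saw no traffic; the count of 120 is an assumed value.
instances = [(0, 0.0), (0, 0.0), (120, 1709.0)]


def unweighted_average(stats):
    """Straight average of per-instance latencies, ignoring traffic."""
    return sum(latency for _, latency in stats) / len(stats)


def weighted_average(stats):
    """Average latency weighted by the number of requests each instance served."""
    total_requests = sum(count for count, _ in stats)
    if total_requests == 0:
        return 0.0
    return sum(count * latency for count, latency in stats) / total_requests


naive = unweighted_average(instances)      # what the console displays
per_request = weighted_average(instances)  # what a user actually experiences
```

Rounded to one decimal, `naive` is the 569.7ms shown by the console, while `per_request` is 1709ms, the latency every single request actually saw.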

No instance identification

What happens when the console is refreshed? Maybe there will only be two instances. How do I know which one went away? Or say there are still three, how do I know these are the same three? For all I know it could be one old instance and two new ones. The single most important data point (from the application administrator’s perspective) is when a new instance comes up. I have no way, in this UI, to know reliably when that happens: no instance identification, no indication of the age of an instance.

Average memory

So we get the average memory per instance. What are we supposed to do with that information? What’s a good number, what’s a bad number? How much memory is available? Is my app memory-bound, CPU-bound or IO-bound on this instance?

Configuration management

As I have described before, change and configuration management in a PaaS setting is a thorny problem. This console doesn’t tackle it. Nowhere does it say which version of the GAE platform each instance is running. Google announces GAE SDK releases (the bits you download), but these releases are mostly made of new platform features, so they imply a corresponding update to Google’s servers. That can’t happen instantly, there must be some kind of roll-out (whether the instances can be hot-patched or need to be recycled). Which means that the instances of my application are transitioned from one platform version to another (and presumably that at a given point in time all the instances of my application may not be using the same platform version). Maybe that’s the source of my problem. Wouldn’t it be nice if I knew which platform version an instance runs? Wouldn’t it be nice if my log files included that? Wouldn’t it be nice if I could request an app to run on a specific platform version for debugging purposes? Sure, in theory all the upgrades are backward-compatible, so it “shouldn’t matter”. But as explained above, “the worst thing you can do is have application owners suffer from a leaky abstraction and refuse to even acknowledge it“.
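As an illustration of the log file wish, here is a minimal sketch of what the application side could look like if the platform exposed its version to each instance. That is a big "if": the `PLATFORM_VERSION` environment variable is purely hypothetical and is not something GAE provides. The rest uses only the standard Python `logging` module.

```python
import io
import logging
import os


class PlatformVersionFilter(logging.Filter):
    """Attach the platform version to every log record passing through."""

    def __init__(self, version):
        super().__init__()
        self.version = version

    def filter(self, record):
        record.platform_version = self.version
        return True  # never drop the record, only annotate it


def make_logger(version, stream):
    """Build a logger whose lines are prefixed with the platform version."""
    logger = logging.getLogger("app")
    logger.setLevel(logging.INFO)
    logger.propagate = False
    logger.handlers.clear()
    handler = logging.StreamHandler(stream)
    handler.setFormatter(
        logging.Formatter("platform=%(platform_version)s %(levelname)s %(message)s")
    )
    handler.addFilter(PlatformVersionFilter(version))
    logger.addHandler(handler)
    return logger


# Hypothetical: assumes the platform publishes its version in this variable.
version = os.environ.get("PLATFORM_VERSION", "unknown")
log = make_logger(version, io.StringIO())
log.info("request served")
```

With every line tagged this way, a latency spike that only shows up on instances running a newer platform version would be visible directly in the logs.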

OK, so the instance monitoring console Google just rolled out is seriously lacking. As is too often the case with IT monitoring systems, it reports what is convenient to collect, not what is useful. I’m sure they’ll fix it over time. What this console does well (and really the main point of this blog) is illustrate the challenge of how much information about the underlying infrastructure should be surfaced.

Surface too little and you leave application administrators powerless. Surface more data but no control and you’ll leave them frustrated. Surface some controls (e.g. a way to configure the scaling out strategy) and you’ve taken away some of the PaaS simplicity and also added constraints to your infrastructure management strategy, making it potentially less efficient. If you go down that route, you can end up with the other flavor of PaaS, the IaaS-based PaaS in which you have an automated way to create a deployment but what you hand back to the application administrator is a set of VMs to manage.

That IaaS-centric PaaS is a well-understood beast, to which many existing tools and management practices can be applied. The “pure PaaS” approach pioneered by GAE is much more of a terra incognita from a management perspective. I don’t know, for example, whether exposing the platform version of each instance, as described above, is a good idea. How leaky is the “platform upgrades are always backward-compatible” assumption? Google, and others, are experimenting with the right abstraction level, APIs, tools, and processes to expose to application administrators. That’s how we’ll find out.

Redeeming the service description document

A bicycle is a convenient way to go buy cigarettes. Until one day you realize that buying cigarettes is a bad idea. At which point you throw away your bicycle.

Sounds illogical? Well, that’s pretty much what the industry has done with service descriptions. It went this way: people used WSDL (and stub generation tools built around it) to build distributed applications that shared too much. Some people eventually realized that was a bad approach. So they threw out the whole idea of a service description. And now, in the age of APIs, we are no more advanced than we were 15 years ago in terms of documenting application contracts. It’s tragic.

The main fallacies involved in this stagnation are:

- Assuming that service descriptions are meant to auto-generate all-encompassing program stubs,

- Looking for the One True Description for a given service,

- Automatically validating messages based on the service description.

I’ll leave the first one aside, it’s been widely covered. Let’s drill in a bit into the other two.

There is NOT One True Description for a given service

Many years ago, in the same galaxy where we live today (only a few miles from here, actually), was a development team which had to implement a web service for a specific WSDL. They fed the WSDL to their SOAP stack. This was back in the days when WSDL interoperability was a “promise” in the “political campaign” sense of the term, so of course it didn’t work. As a result, they gave up on their SOAP stack and implemented the service as a servlet. Which, for a team new to XML, meant a long delay and countless bugs. I’ll always remember the look on the dev lead’s face when I showed him how 2 minutes and a text editor were all you needed to turn the offending WSDL into a completely equivalent WSDL (from the point of view of on-the-wire messages) that their toolkit would accept.

(I forgot what the exact issue was, maybe having operations with different exchange patterns within the same PortType; or maybe it used an XSD construct not supported by the toolkit, and it was just a matter of removing this constraint and moving it from schema to code. In any case something that could easily be changed by editing the WSDL and the consumer of the service wouldn’t need to know anything about it.)

A service description is not the literal word of God. That’s true no matter where you get it from (unless it’s hand-delivered by an angel, I guess). Just because adding “?wsdl” to the URL of a Web service returns an XML document doesn’t mean it’s The One True Description for that service. It’s just the most convenient one to generate for the app server on which the service is deployed.

One of the things that most hurts XML as an on-the-wire format is XSD. But not in the sense that “XSD is bad”. Sure, it has plenty of warts, but what really hurts XML is not XSD per se as much as the all-too-common assumption that if you use XML you need to have an XSD for it (see fat-bottomed specs, the key message of which I believe is still true even though SML and SML-IF are now dead).

I’ve had several conversations like this one:

– The best part about using JSON and REST was that we didn’t have to deal with XSD.

– So what do you use as your service contract?

– Nothing. Just a human-readable wiki page.

– If you don’t need a service contract, why did you feel like you had to write an XSD when you were doing XML? Why not have a similar wiki page describing the XML format?

– …

It’s perfectly fine to have service descriptions that are optimized to meet a specific need rather than automatically focusing on syntax validation. Not all consumers of a service contract need to be using the same description. It’s ok to have different service descriptions for different users and/or purposes. Which takes us to the next fallacy. What are service descriptions for if not syntax validation?

A service description does NOT mean you have to validate messages

As helpful as “validation” may seem as a concept, it often boils down to rejecting messages that could otherwise be successfully processed. Which doesn’t sound quite as useful, does it?

There are many other ways in which service descriptions could be useful, but they have been largely neglected because of the focus on syntactic validation and stub generation. Leaving aside development use cases and looking at my area of focus (application management), here are a few use cases for service descriptions:

Creating test messages (aka “synthetic transactions”)

A common practice in application management is to send test messages at regular intervals (often from various locations, e.g. branch offices) to measure the availability and response time of an application from the consumer’s perspective. If a WSDL is available for the service, we use this to generate the skeleton of the test message, and let the admin fill in appropriate values. Rather than a WSDL we’d much rather have a “ready-to-use” (possibly after admin review) test message that would be provided as part of the service description. Especially as it would be defined by the application creator, who presumably knows a lot more about what makes a safe and yet relevant message to send to the application as a ping.
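
As a rough illustration, a synthetic transaction boils down to something like the sketch below. The `send` transport, the test message and the `is_valid` check are all hypothetical placeholders; the point is that the test message and the validity check are exactly what the application creator could ship as part of the service description.

```python
import time
from dataclasses import dataclass
from typing import Callable, Optional


@dataclass
class ProbeResult:
    available: bool
    response_seconds: float
    error: Optional[str] = None


def run_probe(send: Callable[[str], str], test_message: str,
              is_valid: Callable[[str], bool]) -> ProbeResult:
    """Send one test message and record availability and response time.

    `send` stands in for whatever transport the application uses;
    `test_message` would ideally come from the service description.
    """
    start = time.perf_counter()
    try:
        reply = send(test_message)
    except Exception as exc:  # any transport failure counts as unavailable
        return ProbeResult(False, time.perf_counter() - start, str(exc))
    elapsed = time.perf_counter() - start
    return ProbeResult(is_valid(reply), elapsed)
```

Run on a schedule from a few locations, the list of `ProbeResult` values is the availability and response time picture as the consumer sees it.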

Attaching policies and SLAs

One of the things that WSDLs are often used for, beyond syntax validation and stub generation, is to attach policies and SLAs. For that purpose, you really don’t need the XSD message definition that makes up so much of the WSDL. You really just need a way to identify operations on which to attach policies and SLAs. We could use a much simpler description language than WSDL for this. But if you throw away the very notion of a description language, you’ve thrown away the baby (a classification of the requests processed by the service) along with the bathwater (a syntax validation mechanism).

Governance / versioning

One benefit of having a service description document is that you can see when it changes. Even if you reduce this to a simple binary value (did it change since I last checked, y/n) there’s value in this. Even better if you can introspect the description document to see which requests are affected by the change. And whether the change is backward-compatible. Offering the “before” XSD and the “after” XSD is almost useless for automatic processing. It’s unlikely that some automated XSD inspection can tell me whether I can keep using my previous messages or I need to update them. A simple machine-readable declaration of that fact would be a lot more useful.
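
Both levels are easy to sketch. The first function below is the “did it change, y/n” check, done by hashing the description document. The second assumes a hypothetical machine-readable change declaration published next to the description (the `changed_operations` and `backward_compatible` keys are invented for this example, not part of WSDL or any other standard) and answers the question that actually matters: which of the operations I use need attention?

```python
import hashlib


def fingerprint(description: str) -> str:
    """Stable digest of a service description document."""
    return hashlib.sha256(description.encode("utf-8")).hexdigest()


def has_changed(description: str, last_seen_fingerprint: str) -> bool:
    """The simple binary answer: did it change since I last checked?"""
    return fingerprint(description) != last_seen_fingerprint


def operations_needing_update(change_declaration: dict, used_operations: set) -> set:
    """Operations I use whose change is declared as not backward-compatible.

    `change_declaration` is a hypothetical format invented for this sketch.
    """
    changed = change_declaration.get("changed_operations", {})
    breaking = {
        op for op, info in changed.items()
        if not info.get("backward_compatible", False)
    }
    return breaking & used_operations
```

Note that neither function looks at message syntax. The hash treats the description as an opaque document, and the declaration is keyed by operation, not by schema.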

I just listed three, but there are other application management use cases, like governance/auditing, that need a service description.

In the SOAP world, we usually make do with WSDL for these tasks, not because it’s the best tool (all we really need is a way to classify requests in “buckets” – call them “operations” if you want – based on the content of the message) but because WSDL is the only understanding that is shared between the caller and the application.

By now some of you may have already drafted in your head the comment you are going to post explaining why this is not a problem if people just use REST. And it’s true that with REST there is a default categorization of incoming messages. A simple matrix with the various verbs as columns (GET, POST…) and the various resource types as rows. Each message can be unambiguously placed in one cell of this matrix, so I don’t need a service description document to have a request classification on which I can attach SLAs and policies. Granted, but keep these three things in mind:

- This default categorization by verb and resource type can be quite granular. Typically you wouldn’t have that many different policies on your application. So someone who understands the application still needs to group the invocations into message categories at the right level of granularity.

- This matrix is only meaningful for the subset of “RESTful” apps that are truly… RESTful. Not for all the apps that use REST where it’s an easy mental mapping but then define resource types called “operations” or “actions” that are just a REST veneer over RPC.

- Even if using REST was a silver bullet that eliminated the need for service definitions, as an application management vendor I don’t get to pick the applications I manage. I have to have a solution for what customers actually do. If I restricted myself to only managing RESTful applications, I’d shrink my addressable market by a few orders of magnitude. I don’t have an MBA, but it sounds like a bad idea.
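
To illustrate the first point, here is a minimal sketch of what “grouping the invocations into message categories” could look like for a RESTful app. The resource paths, category names and SLA targets are all made up for the example; what matters is that someone who knows the application has to write the equivalent of `RULES`, because the verb/resource matrix alone doesn’t provide it.

```python
import re

# Hypothetical rules: (HTTP verbs, path pattern, category). First match wins.
RULES = [
    ({"GET", "HEAD"}, re.compile(r"^/orders(/[^/]+)?$"), "order-read"),
    ({"POST", "PUT", "DELETE"}, re.compile(r"^/orders(/[^/]+)?$"), "order-write"),
    ({"GET", "HEAD"}, re.compile(r"^/catalog/"), "catalog-browse"),
]

# Hypothetical SLA targets per category, in milliseconds.
SLA_MS = {"order-read": 200, "order-write": 500, "catalog-browse": 300}


def classify(verb: str, path: str) -> str:
    """Place a request in a coarse category instead of a matrix cell."""
    for verbs, pattern, category in RULES:
        if verb.upper() in verbs and pattern.search(path):
            return category
    return "unclassified"


def violates_sla(verb: str, path: str, elapsed_ms: float) -> bool:
    """True if the request's category has an SLA and the request exceeded it."""
    target = SLA_MS.get(classify(verb, path))
    return target is not None and elapsed_ms > target
```

A description language geared towards classification would essentially be a shared, declarative version of that `RULES` table, usable whether the messages are REST calls or SOAP envelopes.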

This is not a SOAP versus REST post. This is not an XML versus JSON post. This is not a WSDL versus WADL post. This is just a post lamenting the fact that the industry seems to have either boxed service definitions into a very limited use case, or given up on them altogether. If I wasn’t recovering from standards burnout, I’d look into a versatile mechanism for describing application services in a way that is geared towards message classification more than validation.

Filed under API, Application Mgmt, Everything, Governance, IT Systems Mgmt, Manageability, Mashup, Mgmt integration, Middleware, Modeling, Protocols, REST, SML, SOA, Specs, Standards

Exalogic, EC2-on-OVM, Oracle Linux: The Oracle Open World early recap

Among all the announcements at Oracle Open World so far, here is a summary of those I was the most impatient to blog about.

Oracle Exalogic Elastic Cloud

This was the largest part of Larry’s keynote; he called it “one big honkin’ cloud”. An impressive piece of hardware (360 2.93GHz cores, 2.8TB of RAM, 960GB SSD, 40TB disk for one full rack) with excellent InfiniBand connectivity between the nodes. And you can extend the InfiniBand connectivity to other Exalogic and/or Exadata racks. The whole package is optimized for the Oracle Fusion Middleware stack (WebLogic, Coherence…) and managed by Oracle Enterprise Manager.

This is really just the start of a long lineage of optimized, pre-packaged, simplified (for application administrators and infrastructure administrators) application platforms. Management will play a central role and I am very excited about everything Enterprise Manager can and will bring to it.

If “Exalogic Elastic Cloud” is too taxing to say, you can shorten it to “Exalogic” or even just “EL”. Please, just don’t call it “E2C”. We don’t want to get into a trademark fight with our good friends at Amazon, especially since the next important announcement is…

Run certified Oracle software on OVM at Amazon

Oracle and Amazon have announced that AWS will offer virtual machines that run on top of OVM (Oracle’s hypervisor). Many Oracle products have been certified in this configuration; AMIs will soon be available. There is a joint support process in place between Amazon and Oracle. The virtual machines use hard partitioning and the licensing rules are the same as those that apply if you use OVM and hard partitioning in your own datacenter. You can transfer licenses between AWS and your data center.

One interesting aspect is that there is no extra fee on Amazon’s part for this. Which means that you can run an EC2 VM with Oracle Linux on OVM (an Oracle-tested combination) for the same price (without Oracle Linux support) as some other Linux distribution (also without support) on Amazon’s flavor of Xen. And install any software, including non-Oracle, on this VM. This is not the primary intent of this partnership, but I am curious to see if some people will take advantage of it.

Speaking of Oracle Linux, the next announcement is…

The Unbreakable Enterprise Kernel for Oracle Linux

In addition to the RedHat-compatible kernel that Oracle has been providing for a while (and will keep supporting), Oracle will also offer its own Linux kernel. I am not enough of a Linux geek to get teary-eyed about the birth announcement of a new kernel, but here is why I think this is an important milestone. The stratification of the application runtime stack is largely a relic of the past, when each layer had enough innovation to justify combining them as you see fit. Nowadays, the innovation is not in the hypervisor, in the OS or in the JVM as much as it is in how effectively they all combine. JRockit Virtual Edition is a clear indicator of things to come. Application runtimes will eventually be highly integrated and optimized. No more scheduler on top of a scheduler on top of a scheduler. If you squint, you’ll be able to recognize aspects of a hypervisor here, aspects of an OS there and aspects of a JVM somewhere else. But it will be mostly of interest to historians.

Oracle has by far the most expertise in JVMs and over the years has built a considerable amount of expertise in hypervisors. With the addition of Solaris and this new milestone in Linux access and expertise, what we are seeing is the emergence of a company for which there will be no technical barrier to innovation on making all these pieces work efficiently together. And, unlike many competitors who derive most of their revenues from parts of this infrastructure, no revenue-protection handcuffs hampering innovation either.

Fusion Apps

Larry also talked about Fusion Apps, but I believe he plans to spend more time on this during his Wednesday keynote, so I’ll leave this topic aside for now. Just remember that Enterprise Manager loves Fusion Apps.

And what about Enterprise Manager?

We don’t have many attention-grabbing Enterprise Manager product announcements at Oracle Open World 2010, because we had a big launch of Enterprise Manager 11g earlier this year, in which a lot of new features were released. Technically these are not Oracle Open World news anymore, but many attendees have not seen them yet so we are busy giving demos, hands-on labs and presentations. From an application and middleware perspective, we focus on end-to-end management (e.g. from user experience to BTM to SOA management to Java diagnostic to SQL) for faster resolution, application lifecycle integration (provisioning, configuration management, testing) for lower TCO and unified coverage of all the key parts of the Oracle portfolio for productivity and reliability. We are also sharing some plans and our vision on topics such as application management, Cloud, support integration etc. But in this post, I have chosen to only focus on new product announcements. Things that were not publicly known 48 hours ago. I am also not covering JavaOne (see Alexis). There is just too much going on this week…

Just kidding, we like it this way. And so do the customers I’ve been talking to.

Filed under Amazon, Application Mgmt, Cloud Computing, Conference, Everything, Linux, Manageability, Middleware, Open source, Oracle, Oracle Open World, OVM, Tech, Trade show, Utility computing, Virtualization, Xen

The PaaS Lament: In the Cloud, application administrators should administrate applications

Some organizations just have “systems administrators” in charge of their applications. Others call out an “application administrator” role but it is usually overloaded: it doesn’t separate the application platform administrator from the true application administrator. The first one is in charge of the application runtime infrastructure (e.g. the application server, SOA tools, MDM, IdM, message bus, etc). The second is in charge of the applications themselves (e.g. Java applications and the various artifacts that are used to customize the middleware stack to serve the application).

In effect, I am describing something close to the split between the DBA and the application administrators. The first step is to turn this duo (app admin, DBA) into a triplet (app admin, platform admin, DBA). That would be progress, but such a triplet is not actually what I am really after as it is too strongly tied to a traditional 3-tier architecture. What we really need is a first-order separation between the application administrator and the infrastructure administrators (note the plural). And then, if needed, a second-order split between a handful of different infrastructure administrators, one of which may be a DBA (or a DBA++, having expanded to all data storage services, not just relational), another of which may be an application platform administrator.

There are two reasons for the current unfortunate amalgam of the “application administrator” and “application platform administrator” roles. A bad one and a good one.

The bad reason is a shortcoming of the majority of middleware products. While they generally do a good job on performance, reliability and developer productivity, they generally do a poor job at providing a clean separation of the performance/administration functions that are relevant to the runtime and those that are relevant to the deployed applications. Their usual role definitions are more structured along the lines of what actions you can perform rather than on what entities you can perform them. From a runtime perspective, the applications are not well isolated from one another either, which means that in real life you have to consider the entire system (the middleware and all deployed applications) if you want to make changes in a safe way.

The good reason for the current lack of separation between application administrators and middleware administrators is that middleware products have generally done a good job of supporting development innovation and optimization. Frameworks appear and evolve to respond to the challenges encountered by developers. Knobs and dials are exposed which allow heavy customization of the runtime to meet the performance and feature needs of a specific application. With developers driving what middleware is used and how it is used, it’s a natural consequence that the middleware is managed in tight correlation with how the application is managed.

Just like there is tension between DBAs and the “application people” (application administrators and/or developers), there is an inherent tension in the split I am advocating between application management and application platform management. The tension flows from the previous paragraph (the “good reason” for the current amalgam): a split between application administrators and application platform administrators would have the downside of dampening application platform innovation. Or rather it redirects it, in a mutation not unlike the move from artisans to industry. Rather than focusing on highly-specialized frameworks and highly-tuned runtimes, the application platform innovation is redirected towards the goals of extreme cost efficiency, high reliability, consistent security and scalability-by-default. These become the main objectives of the application platform administrator. In that perspective, the focus of the application architect and the application administrator needs to switch from taking advantage of the customizability of the runtime to optimize local-node performance towards taking advantage of the dynamism of the application platform to optimize for scalability and economy.

Innovation in terms of new frameworks and programming models takes a hit in that model, but there are ways to compensate. The services offered by the platform can be at different levels of generality. The more generic ones can be used to host innovative application frameworks and tools. For example, a highly-specialized service like an identity management system is hard to use for another purpose, but on the other hand a JVM can be used to host not just business applications but also platform-like things like Hadoop. They can run in the “application space” until they are mature enough to be incorporated in the “application platform space” and become the responsibility of the application platform administrator.

The need to keep a door open for innovation is part of why, as much as I believe in PaaS, I don’t think IaaS is going away anytime soon. Not only do we need VMs for backward-looking legacy apps, we also need polyvalent platforms, like a VM, for forward-looking purposes, to allow developers to influence platform innovation, based on their needs and ideas.

Forget the guillotine, maybe I should carry an axe around. That may help get the point across, that I want to slice application administrators in two, head to toe. PaaS is not a question of runtime. It’s a question of administrative roles.

Filed under Application Mgmt, Cloud Computing, Everything, IT Systems Mgmt, Manageability, Mgmt integration, Middleware, PaaS, Utility computing, Virtualization

The necessity of PaaS: Will Microsoft be the Singapore of Cloud Computing?

From ancient Mesopotamia to, more recently, Holland, Switzerland, Japan, Singapore and Korea, the success of many societies has been in part credited to their lack of natural resources. The theory being that it motivated them to rely on human capital, commerce and innovation rather than resource extraction. This approach eventually put them ahead of their better-endowed neighbors.

A similar dynamic may well propel Microsoft ahead in PaaS (Platform as a Service): IaaS with Windows is so painful that it may force Microsoft to focus on PaaS. The motivation is strong to “go up the stack” when the alternative is to cultivate the arid land of Windows-based IaaS.

I should disclose that I work for one of Microsoft’s main competitors, Oracle (though this blog only represents personal opinions), and that I am not an expert Windows system administrator. But I have enough experience to have seen some of the many reasons why Windows feels like a much less IaaS-friendly environment than Linux: e.g. the lack of SSH, the cumbersomeness of RDP, the constraints of the Windows license enforcement system, the Windows update mechanism, the immaturity of scripting, the difficulty of managing Windows from non-Windows machines (despite WS-Management), etc. For a simple illustration, go to EC2 and compare, between a Windows AMI and a Linux AMI, the steps (and time) needed to get from selecting an image to the point where you’re logged in and in control of a VM. And if you think that’s bad, things get even worse when we’re not just talking about a few long-lived Windows server instances in the Cloud but a highly dynamic environment in which all steps have to be automated and repeatable.

I am not saying that there aren’t ways around all this, just like it’s not impossible to grow grapes in Holland. It’s just usually not worth the effort. This recent post by RightScale illustrates both how hard it is but also that it is possible if you’re determined. The question is what benefits you get from Windows guests in IaaS and whether they justify the extra work. And also the additional license fee (while many of the issues are technical, others stem more from Microsoft’s refusal to acknowledge that the OS is a commodity). [Side note: this discussion is about Windows as a guest OS and not about the comparative virtues of Hyper-V, Xen-based hypervisors and VMWare.]

Under the DSI banner, Microsoft has been working for a while on improving the management/automation infrastructure for Windows, with tools like PowerShell (which I like a lot). These efforts pre-date the Cloud wave but definitely help Windows try to hold its own on the IaaS battleground. Still, it’s an uphill battle compared with Linux. So it makes perfect sense for Microsoft to move the battle to PaaS.

Just like commerce and innovation will, in the long term, bring more prosperity than focusing on mining and agriculture, PaaS will, in the long term, yield more benefits than IaaS. Even though it’s harder at first. That’s the good news for Microsoft.

On the other hand, lack of natural resources is not a guarantee of success either (as many poor desertic countries can testify) and Microsoft will have to fight to be successful in PaaS. But the work on Azure and many research efforts, like the “next-generation programming model for the cloud” (codename “Orleans”) that Mary Jo Foley revealed today, indicate that they are taking it very seriously. Their approach is not restricted by a VM-centric vision, which is often tempting for hypervisor and OS vendors. Microsoft’s move to PaaS is also facilitated by the fact that, while system administration and automation may not be a strength, development tools and application platforms are.

The forward-compatible Cloud will soon overshadow the backward-compatible Cloud and I expect Microsoft to play a role in it. They have to.

Updates on Microsoft Oslo and “SSH on Windows”

I’ve been tracking the modeling technology previously known as “Microsoft Oslo” with a sympathetic eye for the almost three years since it was introduced. I look at it from the perspective of model-driven IT management but the news hadn’t been good on that front lately (except for Douglas Purdy’s encouraging hint).

The prospects got even bleaker today, at least according to the usually-well-informed Mary Jo Foley, who writes: “Multiple contacts of mine are telling me that Microsoft has decided to shelve Quadrant and ‘refocus’ M.” Is “M” the end of the SDM/SML/M model-driven management approach at Microsoft? Or is the “refocus” a hint that M is returning “home” to address IT management use cases? Time (or Doug) will tell…

While we’re talking about Microsoft and IT automation, I have one piece of free advice for the Microsofties: people *really* want to SSH into Windows servers. Here’s how I know. This blog rarely talks about Microsoft but over the course of two successive weekends over a year ago I toyed with ways to remotely manage Windows machines using publicly documented protocols. In effect, showing what to send on the wire (from Linux or any platform) to leverage the SOAP-based management capabilities in recent versions of Windows. To my surprise, these posts (1, 2, 3) still draw a disproportionate amount of traffic. And whenever I look at my httpd logs, I can count on seeing search engine queries related to “windows native ssh” or similar keywords.

If heterogeneous Cloud is something Microsoft cares about they need to better leverage the potential of the PowerShell Remoting Protocol. They can release open-source Python, Java and Ruby client-side libraries. Alternatively, they can drastically simplify the protocol, rather than its current “binary over SOAP” (you read this right) incarnation. Because the poor Kridek who is looking for the “WSDL for WinRM / Remote Powershell” is in for a nasty surprise if he finds it and thinks he’ll get a ready-to-use stub out of it.

That being said, a brave developer willing to suck it up and create such a Python/Ruby/Java library would probably make some people very grateful.

Filed under Application Mgmt, Automation, Everything, Implementation, IT Systems Mgmt, Manageability, Mgmt integration, Microsoft, Modeling, Oslo, Protocols, SML, SOAP, Specs, Tech, WS-Management

PaaS portability challenges and the VMforce example

The VMforce announcement is a great step for SalesForce, in large part because it lets them address a recurring concern about the force.com PaaS offering: the lack of portability of Apex applications. Now they can be written using Java and Spring instead. A great illustration of how painful this issue was for SalesForce is to see the contortions that Peter Coffee goes through just to acknowledge it: “On the downside, a project might be delayed by debates—some in good faith, others driven by vendor FUD—over the perception of platform lock-in. Political barriers, far more than technical barriers, have likely delayed many organizations’ return on the advantages of the cloud”. The issue is not lock-in, it’s the potential delays that may come from the perception of lock-in. Poetic.

Similarly, portability between clouds is also a big theme in Steve Herrod’s blog covering VMforce as illustrated by the figure below. The message is that “write once run anywhere” is coming to the Cloud.

Because this is such a big part of the VMforce value proposition, both from the SalesForce and the VMWare/SpringSource side (as well as for PaaS in general), it’s worth looking at the portability aspect in more detail. At least to the extent that we can do so based on this pre-announcement (VMforce is not open for developers yet). And while I am taking VMforce as an example, all the considerations below apply to any enterprise PaaS offering. VMforce just happens to be one of the brave pioneers, willing to take a first step into the jungle.

Beyond the use of Java as a programming language and Spring as a framework, the portability also comes from the supporting tools. This is something I did not cover in my initial analysis of VMforce but that Michael Cote covers well on his blog and Carl Brooks in his comment. Unlike the more general considerations in my previous post, these matters of tooling are hard to discuss until the tools are actually out. We can describe what they “could”, “should” and “would” do all day long, but in the end we need to look at the application in practice and see what, if anything, needs to change when I redirect my deployment target from one cloud to the other. As SalesForce’s Umit Yalcinalp commented, “the details are going to be forthcoming in the coming months and it is too early to speculate”.

So rather than speculating on what VMforce tooling will do, I’ll describe what portability questions any PaaS platform would have to address (or explicitly decline to address).

Code portability

That’s the easiest to address. Thanks to Java, the runtime portability problem for the core language is pretty much solved. Still, moving applications around requires changes to the way the application communicates with its infrastructure. Can your libraries and frameworks for data access and identity, for example, successfully encapsulate and hide the different kinds of data/identity stores behind them? Even when the stores are functionally equivalent (e.g. SQL, LDAP), they may have operational differences that matter for an enterprise application. Especially if the database is delivered (and paid for) as a service. I may well design my application differently depending on whether I am charged by the amount of data in the DB, by the number of requests to the DB, by the quantity of app-to-DB traffic or by the total processing time of my requests in the DB. Apparently force.com considers the number of “database objects” in its pricing plans and going over 200 pushes you from the “Enterprise” version to the more expensive “Unlimited” version. If I run against my local relational database I don’t think twice about having 201 “database objects”. But if I run in force.com and I otherwise can live within the limits of the “Enterprise” version I’d probably be tempted to slightly alter my data model to fit under 200 objects. The example is borderline silly, but the underlying truth is that not all differences in application infrastructure can be automatically encapsulated by libraries.
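
A toy calculation shows how the billing model alone can flip a design decision. Everything below is invented for illustration: the two data model designs, their usage figures and the rates are hypothetical and do not reflect force.com’s or anyone else’s price list.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Usage:
    stored_gb: int           # data kept in the database
    requests_thousands: int  # requests sent to the database, in thousands


# Invented rates, in arbitrary currency units; not any provider's price list.
def cost_by_storage(u: Usage) -> int:
    return 2 * u.stored_gb


def cost_by_requests(u: Usage) -> int:
    return 1 * u.requests_thousands


def cheapest(designs: dict, pricing) -> str:
    """Name of the design with the lowest bill under `pricing`."""
    return min(designs, key=lambda name: pricing(designs[name]))


DESIGNS = {
    # Normalized model: little stored data, many small queries.
    "normalized": Usage(stored_gb=10, requests_thousands=500),
    # Denormalized model: duplicated data, far fewer queries.
    "denormalized": Usage(stored_gb=40, requests_thousands=50),
}
```

With these made-up numbers, `cheapest(DESIGNS, cost_by_storage)` picks the normalized design while `cheapest(DESIGNS, cost_by_requests)` picks the denormalized one. Same application, same functionally equivalent store, different data model, and no data access library is going to hide that for you.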

While code portability is a solvable problem for a reasonably large set of use cases, things get hairier for the more demanding applications. A large part of the PaaS value proposition is contingent on the willingness to give up some low-level optimizations. This, and harder portability in some cases, may just have to be part of the cost of running demanding applications in a PaaS environment. Or you can just keep these off PaaS for now. This is part of the backward-compatible versus forward-compatible Cloud dilemma.

Data portability

I have covered data portability in the previous entry, in response to Steve Herrod’s comment that “you should be able to extract the code from the cloud it currently runs in and move it, along with its data, to another cloud choice”. Your data in the force.com database can already be moved somewhere else… as long as you’re willing to write code to get it and perform any needed transformation. In theory, any data that you can read is data that you can move (thus fulfilling Steve’s promise). The question is at what cost. Presumably Steve is referring to data migration tools that VMWare will build (or acquire) and make part of its cloud enablement platform. Another way in which VMWare is trying to assemble a more complete middleware portfolio (see Oracle ODI for an example of a complete data integration offering, which goes far beyond ETL).

There is a subtle difference between the intrinsic portability of Java (which will run in any JRE, modulo JDK version) and the extrinsic portability of data which can in theory be moved anywhere but each place you move it to may require a different process. A car and an oak armoire are both “portable”, but one is designed for moving while the other will only move if you bring a truck and two strong guys.

Application service portability

I covered this in my previous entry and Bob Warfield summarized it as “take advantage of all those juicy services and it will be hard to back out of that platform, Java or no Java”. He is referring to all the platform services (search, reporting, mobile, integration, BPM, IdM, administration) that make up a large part of the force.com value proposition. They won’t be waiting for you in your private cloud (though some may be remotely invocable, depending on how SalesForce wants to play its cards). Applications that depend on them will have to be changed, at least until we have standard interfaces for all these services (don’t hold your breath).

Management portability

Even if you can seamlessly migrate your application and your data from your internal servers to force.com, what do you think is going to happen to your management console, especially if it uses operating system agents? These agents are not coming along for the ride, that’s for sure. Are you going to tell your administrators that rather than having a centralized configuration/monitoring/event console they are going to have to look at cute “monitoring” web pages for each application? And all the transaction tracing, event correlation, configuration policy and end-user monitoring features they were relying on are unfortunate victims of the relentless march of progress? Good luck with that sale.

VMWare’s answer will probably be that they will eventually provide you with all the management capabilities that you need. And it’s a fair one, along the lines of the “Application-to-Disk Management” message at the recent launch of Oracle Enterprise Manager 11G. With the difference that EM is not the only way to manage a top-to-bottom Oracle stack, just the one that we think is the best. BMC and HP aren’t locked out.

VMWare and SpringSource (+Hyperic) could indeed theoretically assemble a full-fledged management solution. But this doesn’t happen overnight, even with acquisitions, as I know from experience both at HP Software and currently at Oracle. Integration (of management domains across the stack, of acquired application management products, of support data/services from oracle.com) is one of the main advances in Enterprise Manager 11G and it took work.

And even then, this leads to the next logical question. If you can move from cloud to cloud but you are forced to use VMWare development, deployment and management tools, haven’t you traded one lock-in problem for another?

Not to mention that your portability between clouds, if it depends on VMWare tools, is limited to VMWare-powered clouds (private or public). In effect, there are now three levels of portability:

- not portable (only runs on VMforce)

- portable to any cloud (public or private) built using VMWare infrastructure

- portable to any Java/Spring Cloud platform

Is your application portable the way cash is portable, or the way a gift card is portable (across stores of a retail chain)?

If this reminds you of the Java portability debates of the early days of Enterprise Java, that’s no surprise. Remember, we’re replaying the tape.

Waiting for events (in Cloud APIs)

Events/alerts/notifications have been a central concept in IT management at least since the first SNMP trap was emitted, and probably even long before that. And yet they are curiously absent from all the Cloud management APIs/protocols. If you think that’s because “THE CLOUD CHANGES EVERYTHING” then you may have to think again. Over the last few days, two of the most experienced practitioners of Cloud computing pointed out that this omission is a real pain in the neck. RightScale’s Thorsten von Eicken was first to request “an event based interface instead of a request-reply based interface”, pointing out that “we run a good number of machines that do nothing but chew up 100% cpu polling EC2 to detect changes”. George Reese seconded and started to sketch a solution. And while these blog posts gave the issue increased visibility recently, it has been a recurring topic on the AWS Forum and other similar discussion boards for quite some time. For example, in this thread going back to 2006, an Amazon employee wrote that “this is a feature we’ve discussed recently and we’re looking at options” (incidentally, I see a post by Thorsten in that old thread). We’re still waiting.

Let’s look at what it would take to define such a feature.

I have some experience with events for IT management, having been involved in the WS-Notification family of specifications and having co-chaired the OASIS technical committee that standardized them. This post is not about foisting WS-Notification on Cloud APIs, but just about surfacing some of the questions that come up when you try to standardize such a mechanism. While the main use cases for WS-Notification came from IT (and Grid) management, it was supposed to be a generic mechanism. A Cloud-centric eventing protocol can be made simpler by focusing on fewer use cases (Cloud scenarios only). In addition, WS-Notification was marred by the complexity-is-a-sign-of-greatness spirit of the time. On this too, a Cloud eventing protocol could improve things by keeping ~~IBM at bay~~ simplicity in mind.

Types of event

When you pull the state of a resource to see if anything changed, you don’t have to tell the provider what kind of change you are interested in. If, on the other hand, you want the provider to notify you, then they need to know what you care about. You may not want to be notified on every single change in the resource state. How do you describe the changes you care about? Is there an agreed-upon set of states for the resource and you are only notified on state transitions? Can you indicate the minimum severity level for an event to be emitted? Who determines the severity of an event? Or do you get to specify what fields in the resource state you want to watch? What about numeric values for which you may not want to be notified of every change but only when a threshold is crossed? Do you get to specify a query and get notified whenever the query result changes? In WS-Notification some of this is handled by WS-Topics which I still like conceptually (I co-edited it) but is too complex for the task at hand.
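Two of the filter styles listed above (state transitions and threshold crossings) can be sketched in a few lines of Python. This is only an illustration under assumptions: the class names, the `matches(old, new)` signature and the representation of resource state as a plain dict are all hypothetical, not taken from WS-Topics or any Cloud API.

```python
from dataclasses import dataclass


@dataclass
class StateTransitionFilter:
    """Match only when the resource enters one of the watched states."""
    field: str
    watched_states: frozenset

    def matches(self, old: dict, new: dict) -> bool:
        changed = old.get(self.field) != new.get(self.field)
        return changed and new.get(self.field) in self.watched_states


@dataclass
class ThresholdFilter:
    """Match only when a numeric field crosses the threshold, in either direction."""
    field: str
    threshold: float

    def matches(self, old: dict, new: dict) -> bool:
        was_above = old[self.field] > self.threshold
        is_above = new[self.field] > self.threshold
        return was_above != is_above
```

With `ThresholdFilter("cpu", 90.0)`, a move from 85 to 95 produces a notification while a move from 91 to 99 does not, which is the "only when a threshold is crossed" behavior described above.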

Event formats

What format are the events serialized in? How is the event metadata captured (e.g. time stamp of observation, which may not be the same as the time at which the notification message was sent)? If the event payload is a representation of the new state of the resource, does it indicate which fields changed (and what the old values were)? How do you keep event payloads consistent with the resource representation in the request/response interactions? If many events occur near the same time, can you group them in one notification message for better scalability?
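One possible answer to these format questions, sketched in Python with JSON as the serialization. Everything here is an assumption made for illustration: the `Event` fields, the `diff_state` and `build_notification` helpers and the message layout are hypothetical, not an existing format.

```python
import json
from dataclasses import dataclass, asdict
from datetime import datetime, timezone


@dataclass
class Event:
    resource_id: str
    observed_at: str   # when the change was observed, ISO 8601
    changes: dict      # field name -> {"old": ..., "new": ...}


def diff_state(old: dict, new: dict) -> dict:
    """List only the fields whose value changed, with old and new values."""
    keys = old.keys() | new.keys()
    return {k: {"old": old.get(k), "new": new.get(k)}
            for k in sorted(keys) if old.get(k) != new.get(k)}


def build_notification(events: list, sent_at: datetime) -> str:
    """Group several events in one message; sent_at is kept apart from observed_at."""
    return json.dumps({
        "sent_at": sent_at.isoformat(),
        "events": [asdict(e) for e in events],
    })
```

Keeping `observed_at` on each event and `sent_at` on the envelope lets the receiver tell a delayed delivery from a recent change, and the `changes` map answers the "what was the old value" question without a second request.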

Subscription creation

Presumably you need a subscription mechanism. Is the subscription set in stone when the resource is created? Or can you come later and subscribe? If subscription is an operation on the resource itself, how do you subscribe for events on something that doesn’t exist yet (e.g. “create a VM and notify me once it’s started”)? Do you get to set subscriptions on a per-resource basis? Or is this a global setting for all the resources that you own? Can you have two different subscriptions on the same resource (e.g. a “critical events only” subscription that exists throughout the life of the resource, plus a “lots of events please” subscription that you keep for a few hours while troubleshooting)?
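One way to sidestep the "resource doesn’t exist yet" problem is to make subscriptions separate objects that select resources by attribute rather than hanging them off the resource. The Python sketch below assumes that design; the `selector` dict, the numeric severity scale and the example URLs are hypothetical.

```python
import itertools
from dataclasses import dataclass


@dataclass
class Subscription:
    sub_id: int
    selector: dict      # e.g. {"id": "vm-1"} or {"owner": "alice"}; {} matches everything
    min_severity: int
    endpoint: str


class SubscriptionRegistry:
    def __init__(self):
        self._subs = {}
        self._ids = itertools.count(1)

    def subscribe(self, selector, min_severity, endpoint):
        sub = Subscription(next(self._ids), dict(selector), min_severity, endpoint)
        self._subs[sub.sub_id] = sub
        return sub

    def targets(self, resource: dict, severity: int):
        """Endpoints that should receive an event of this severity on this resource."""
        return [s.endpoint for s in self._subs.values()
                if severity >= s.min_severity
                and all(resource.get(k) == v for k, v in s.selector.items())]
```

A long-lived `{"owner": "alice"}` subscription with a high `min_severity` and a temporary `{"id": "vm-1"}` subscription with a low one can then coexist on the same VM, even if both were created before the VM was.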

Subscription management

Do you get to come back and update/pause/delete a subscription? Do you get to change what filter the subscription carries? Or is it set in stone until the subscription expires? Can you change the delivery endpoint? What if events fail to be delivered? Does the provider cancel your subscription? After how many failures? Does it just pause it for a few hours? Keep trying?
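The delivery-failure questions boil down to a small state machine. Here is a minimal Python sketch of one possible policy (pause after a few consecutive failures, cancel after many). The thresholds and the `active`/`paused`/`cancelled` status names are assumptions chosen for the example, not anything a provider has specified.

```python
from dataclasses import dataclass


@dataclass
class DeliveryPolicy:
    pause_after: int = 3     # consecutive failures before pausing
    cancel_after: int = 10   # consecutive failures before cancelling


class SubscriptionState:
    def __init__(self, policy: DeliveryPolicy):
        self.policy = policy
        self.failures = 0
        self.status = "active"

    def record_success(self):
        self.failures = 0
        if self.status == "paused":
            self.status = "active"   # a cancelled subscription stays cancelled

    def record_failure(self):
        self.failures += 1
        if self.failures >= self.policy.cancel_after:
            self.status = "cancelled"
        elif self.failures >= self.policy.pause_after:
            self.status = "paused"
```

Whatever the actual numbers, the subscriber needs to know them in advance, since they determine how long an endpoint outage can last before events are lost for good.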

Subscription expiration

Who sets the expiration period? The subscriber? Can the provider set a max duration? Do you get a warning message before the subscription expires? Can you renew a subscription or do you have to create a new one? Do you get a message telling you that it has expired? Where are these subscription-lifecycle messages sent? To the same endpoint as the regular messages? What if your subscription is being killed because your delivery endpoint is down? Clearly it makes no sense to send the warning message to that same endpoint. Do you provide a separate “subscription management” endpoint (different from the event delivery endpoint) when you subscribe? Alternatively, does an email message get sent to the registered user who set the subscription?
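A lease-style sketch in Python shows how a few of these choices interact: the subscriber requests a duration, the provider caps it, and lifecycle messages go to a separate management endpoint. The `MAX_DURATION` and `WARNING_WINDOW` values, the function names and the message strings are all hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Lease:
    expires_at: float          # seconds since epoch
    event_endpoint: str
    management_endpoint: str   # receives lifecycle messages only


MAX_DURATION = 24 * 3600       # hypothetical provider-imposed cap, in seconds
WARNING_WINDOW = 3600          # warn this long before expiry


def grant(now, requested_duration, event_endpoint, management_endpoint):
    duration = min(requested_duration, MAX_DURATION)
    return Lease(now + duration, event_endpoint, management_endpoint)


def renew(lease, now, requested_duration):
    if now >= lease.expires_at:
        raise ValueError("lease already expired; create a new subscription")
    lease.expires_at = now + min(requested_duration, MAX_DURATION)
    return lease


def lifecycle_message(lease, now):
    """Return (endpoint, message) to send, or None if nothing is due."""
    if now >= lease.expires_at:
        return (lease.management_endpoint, "expired")
    if lease.expires_at - now <= WARNING_WINDOW:
        return (lease.management_endpoint, "expiring-soon")
    return None
```

Routing the warning to `management_endpoint` rather than `event_endpoint` covers the case where the subscription is dying precisely because the event endpoint is unreachable.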

Delivery reliability

How reliable do you want the notifications to be? Should the emitter retry until they’ve received a confirmation? How long do they keep messages that can’t be delivered? Some may have a very short shelf life while others are still useful weeks later. If you don’t have a reliable mechanism but you really “need to know about a lost server within a minute of it disappearing” (the example George gives) then in reality you may still have to poll just to make sure that an event wasn’t lost. If you haven’t received an event in a while, how can you test if the subscription is still working? Should subscriptions send a heartbeat message once in a while?
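Both sides of this problem can be sketched briefly in Python: the emitter retrying with exponential backoff until it gets a confirmation, and the subscriber using heartbeats to detect a silent subscription. The function names, the `send` callback (assumed to return `True` on acknowledgement) and the default values are illustrative assumptions.

```python
import time


def deliver_with_retry(send, message, max_attempts=5, base_delay=1.0, sleep=None):
    """Call send(message) until it returns True or attempts run out.

    Returns the number of attempts used, or None if delivery failed.
    """
    sleep = sleep or time.sleep
    for attempt in range(1, max_attempts + 1):
        if send(message):
            return attempt
        if attempt < max_attempts:
            sleep(base_delay * 2 ** (attempt - 1))   # exponential backoff
    return None


def subscription_looks_dead(last_message_at, now, heartbeat_interval, tolerance=2):
    """Subscriber side: no event and no heartbeat for `tolerance` intervals."""
    return now - last_message_at > tolerance * heartbeat_interval
```

With a 60-second heartbeat and a tolerance of two intervals, the subscriber knows within about two minutes that something is wrong and can fall back to polling, which is cheaper than polling continuously just in case.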

Delivery mechanism

How do you deliver notifications? Do you keep HTTP connections open through tricks similar to how self-updating web pages work (e.g. COMET, long polling and soon WebSockets)? Or do you just provide a listener endpoint to which the notifier tries to connect (which, in the case of public cloud deployments, means you need to have a publicly-addressable listener, but hopefully not on the same Cloud infrastructure)? Do you use XMPP? AMQP? Email? Can I have you hold my events and let me come pull them?
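The last option ("hold my events and let me come pull them") is the simplest to sketch. The Python class below is a hypothetical provider-side buffer that assigns sequence numbers so the consumer can resume from where it left off; the class name, the bounded size and the `pull` parameters are assumptions for the example.

```python
from collections import deque


class EventBuffer:
    """Provider-side buffer that holds events until the consumer pulls them."""

    def __init__(self, max_size=1000):
        self._events = deque(maxlen=max_size)   # oldest events drop when full
        self._next_seq = 1

    def publish(self, payload):
        self._events.append((self._next_seq, payload))
        self._next_seq += 1

    def pull(self, after_seq=0, limit=100):
        """Return up to `limit` events with a sequence number above after_seq."""
        batch = [(seq, p) for seq, p in self._events if seq > after_seq]
        return batch[:limit]
```

A gap in the sequence numbers tells the consumer that the buffer overflowed and some events were dropped. This is still polling, but the consumer polls one cheap queue instead of every resource, and needs no publicly-addressable listener.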

Security

Do you need to verify the origin of the events you receive? Or do you assume they may be forged and always initiate a connection to the provider to double-check? And on the other side, what are the security requirements for event delivery? If a user loses some of their privileges, do you have to go and cancel the still-active subscriptions that they created?
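One common way to verify the origin of a pushed message, shown here as a Python sketch, is an HMAC computed over the message body with a secret shared at subscription time. The assumption that provider and subscriber exchange such a secret, and the choice of SHA-256, are mine for the purpose of the example; the post does not prescribe a mechanism.

```python
import hashlib
import hmac


def sign(secret: bytes, body: bytes) -> str:
    """Provider side: compute the signature sent alongside the event body."""
    return hmac.new(secret, body, hashlib.sha256).hexdigest()


def verify(secret: bytes, body: bytes, signature: str) -> bool:
    """Subscriber side: recompute and compare in constant time."""
    expected = sign(secret, body)
    return hmac.compare_digest(expected, signature)
```

This proves that the sender knows the secret and that the body was not altered. It does not by itself prevent replay of an old, validly signed event, so a timestamp or sequence number inside the signed body would still be needed.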

Throttling

Is there a maximum event rate? Do you get charged for the events the Cloud provider sends you? How do you make sure that someone doesn’t create a subscription pointing to the wrong endpoint (either erroneously or maliciously, e.g. DoS)? Do you send a test message at registration asking the delivery endpoint to acknowledge that they indeed want to receive these notifications?
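Two of these safeguards are easy to sketch in Python: a token bucket to cap the event rate per subscription, and a challenge that the delivery endpoint must echo back before the subscription is activated. The class and function names, and the idea of echoing a random token, are assumptions for illustration rather than a description of any provider’s behavior.

```python
import secrets


class TokenBucket:
    """Allow `rate` events per second on average, with bursts up to `capacity`."""

    def __init__(self, rate, capacity, now=0.0):
        self.rate, self.capacity = rate, capacity
        self.tokens, self.updated = float(capacity), now

    def allow(self, now):
        elapsed = now - self.updated
        self.tokens = min(self.capacity, self.tokens + elapsed * self.rate)
        self.updated = now
        if self.tokens >= 1:
            self.tokens -= 1
            return True
        return False


def new_challenge():
    """Random token sent to the endpoint in the registration test message."""
    return secrets.token_hex(16)


def endpoint_confirmed(challenge, echoed):
    """Activate the subscription only if the endpoint echoed the challenge back."""
    return secrets.compare_digest(challenge, echoed)
```

The challenge step means a subscription pointed at a third party’s server never goes live, since that server has no reason to echo the token, which removes the most obvious way to use the notification system for DoS.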

Conclusion